At KL we’re always at it. Improving ourselves, that is. Our research, our development, our products, our partnerships, our… yes – all that. But for us all to keep improving – and in the right direction – we all need to work toward one overarching goal, or mission. Enter the mission statement…

Ours is saving the world from cyber-menaces of all types. But how well do we do this? After all, many, if not all, AV vendors have similar mission statements. So what we and – more importantly – users need to know is precisely how well we perform in fulfilling our mission – compared to all the rest…

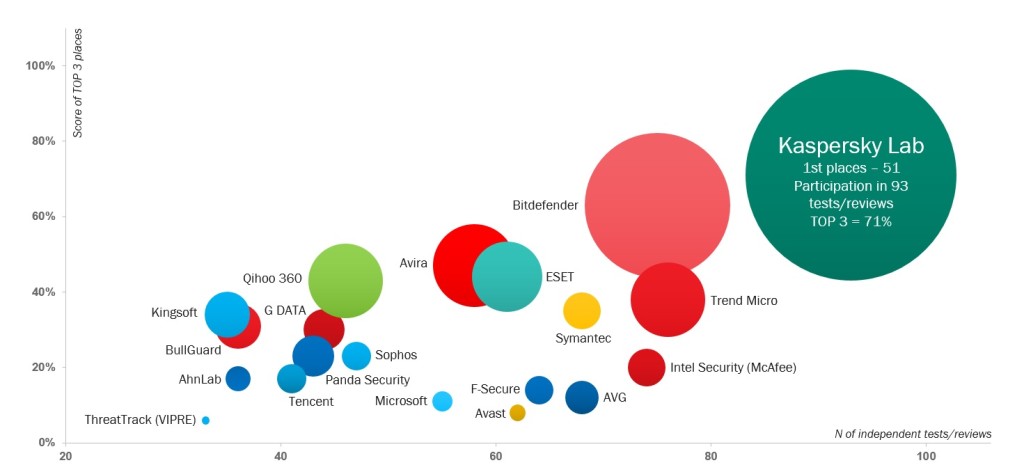

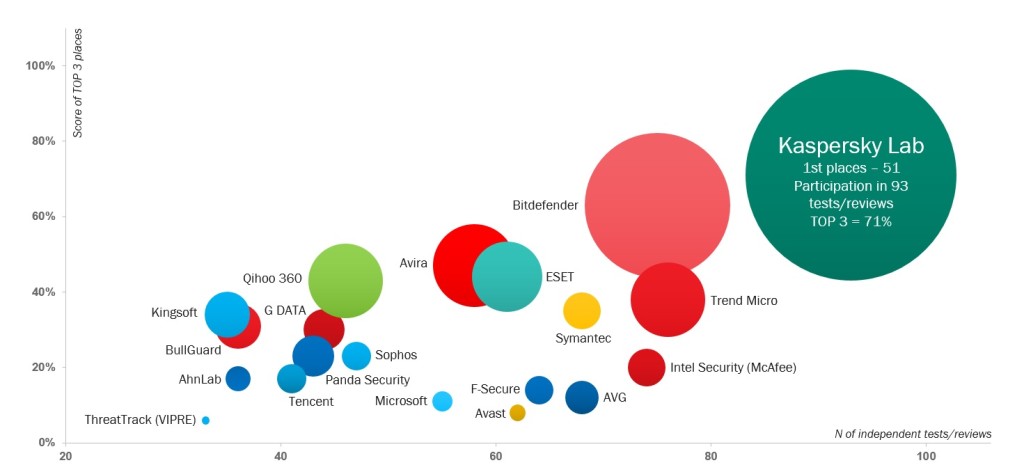

To do this, various metrics are used. And one of the most important is the expert testing of the quality of products and technologies by different independent testing labs. It’s simple really: the better the result on this or that – or all – criteria, the better our tech is at combatting cyber-disease, and the better, objectively, we’re saving the world :).

Thing is, out of all the hundreds of tests by the many independent testing centers around the world, which should be used? I mean, how can all the data be sorted and refined to leave hard, meaningful – and easy to understand and compare – results? There’s also the problem of there being not only hundreds of testing labs but also hundreds of AV vendors so, again, how can it all be sieved – to remove the chaff from the wheat and to then compare just the best wheat? There’s one more problem (it’s actually not that complex, I promise – you’ll see:) – that of biased or selective test results, which don’t give the full picture – the stuff of advertising and marketing since year dot.

Well, guess what. Some years back we devised the following simple formula for accessible, accurate, honest AV evaluation: the Top-3 Rating Matrix!

So how does it work?

First, we need to make sure we include the results of all well-known and respected, fully independent test labs in their comparative anti-malware protection investigations over the given period of time.

Second, we need to include all the different types of tests of the chosen key testers – and on all participating vendors.

Third, we need to take into account (i) the total number of tests in which each vendor took part; (ii) the % of ‘gold medals’; and (iii) the % of top-3 places.
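
For those who like to see the arithmetic, here is a minimal sketch of how those three numbers could be tallied per vendor. It assumes each test result boils down to a (test, vendor, place) record; the function name, the record layout and the vendors in the sample data are all made up for illustration, and this is not the actual tooling behind the matrix.

```python
from collections import defaultdict


def top3_metrics(results):
    """Tally the three Top-3 numbers per vendor.

    `results` is an iterable of (test_id, vendor, place) tuples,
    where place 1 is the best result in that test (hypothetical layout).
    """
    counts = defaultdict(lambda: {"tests": 0, "firsts": 0, "top3": 0})
    for _test_id, vendor, place in results:
        row = counts[vendor]
        row["tests"] += 1          # (i) total number of tests entered
        if place == 1:
            row["firsts"] += 1     # counts toward (ii) % of 'gold medals'
        if place <= 3:
            row["top3"] += 1       # counts toward (iii) % of top-3 places
    return {
        vendor: {
            "tests": c["tests"],
            "first_pct": 100.0 * c["firsts"] / c["tests"],
            "top3_pct": 100.0 * c["top3"] / c["tests"],
        }
        for vendor, c in counts.items()
    }


# Entirely invented sample data: four tests, three imaginary vendors.
sample_results = [
    ("T1", "VendorA", 1), ("T1", "VendorB", 2), ("T1", "VendorC", 4),
    ("T2", "VendorA", 3), ("T2", "VendorB", 1), ("T2", "VendorC", 5),
    ("T3", "VendorA", 1), ("T3", "VendorB", 4),
    ("T4", "VendorA", 2),
]

metrics = top3_metrics(sample_results)
for vendor, m in sorted(metrics.items()):
    print(vendor, m["tests"], round(m["first_pct"], 1), round(m["top3_pct"], 1))
```

Percentages are taken over the tests a vendor actually entered, which is why the total number of tests is kept alongside them: a high percentage over a handful of tests says much less than the same percentage over dozens.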

What we get is simplicity, transparency, meaningful sifting, and no skewed ‘test marketing’ (alas, there is such a thing). Of course it would be possible to add into the matrix another, say, 25,000 parameters – just for that extra 0.025% of objectivity, but that would only be for the satisfaction of technological narcissists and other geek-nerds, and we’d definitely lose the average user… and maybe the not-so-average one too.

To summarize: we take a specific period, take into account all the tests of all the best test labs (on all the main vendors), and don’t miss a thing (like poor results in this or that test) – and that goes for KL of course too.

All righty. Theory over. Now let’s apply that methodology to the real world; specifically – the real world in 2014.

First, a few tech details and disclaimers for those of the geeky-nerdy persuasion:

- Considered in 2014 were the comparative studies of eight independent testing labs (each with years of experience, the requisite technological set-up (I saw some for myself), outstanding industry coverage – both of the vendors and of the different protective technologies – and full membership of AMTSO): AV-Comparatives, AV-Test, Anti-malware, Dennis Technology Labs, MRG EFFITAS, NSS Labs, PC Security Labs and Virus Bulletin. A detailed explanation of the methodology is given in this video and in this document.

- Only vendors taking part in 35% or more of the labs’ tests were taken into account. Otherwise it would be possible to get a ‘winner’ that did well in just a few tests, but which wouldn’t have done well consistently over many tests – if it had taken part in them (so here’s where we filter out the faux-test marketing).
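
The participation cut-off in the last point can be sketched in a few lines. This is only an illustration under stated assumptions: the function name, the total of 100 tests and the per-vendor counts are invented, and only the 35% threshold comes from the text above.

```python
def eligible_vendors(tests_entered, total_tests, min_percent=35):
    """Keep only vendors that took part in at least `min_percent` of all tests.

    `tests_entered` maps vendor name -> number of tests entered.
    Integer arithmetic is used so that exactly 35% counts as eligible.
    """
    return {
        vendor: n
        for vendor, n in tests_entered.items()
        if n * 100 >= min_percent * total_tests
    }


# Invented numbers: 100 tests in the period, three imaginary vendors.
participation = {"VendorA": 90, "VendorB": 35, "VendorC": 12}
kept = eligible_vendors(participation, total_tests=100)
print(sorted(kept))
```

Here the imaginary VendorC, with 12 tests out of 100, drops out before any medals are counted, however well it did in those 12.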

Soooo… analyzing the results of the tests in 2014, we get……..

….Drums roll….

….mouths are cupped….

….breath is bated….

……..we get this!:
