In recent years all sorts has been written about us in the U.S. press, and last Thursday’s article in the Wall Street Journal at first seemed to be just more of the same: the latest in a long line of conspiratorial smear articles. Here’s why it seemed so: according to anonymous sources, a few years ago Russian government-backed hackers allegedly stole secret documentation from the home computer of an NSA employee, with the help of a hack into the product of Your Humble Servant. Btw: our formal response to this story is here.

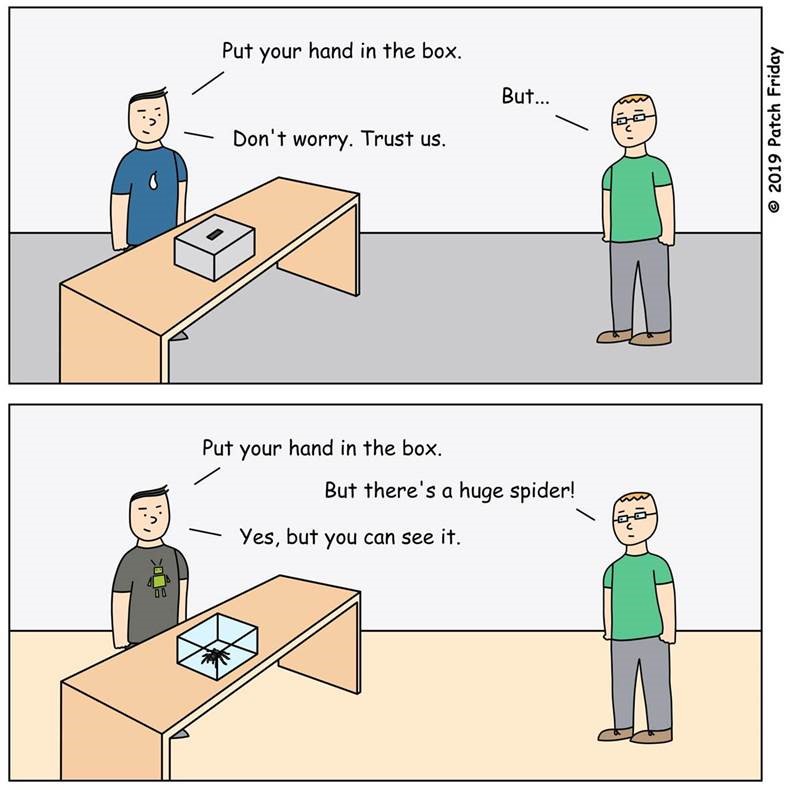

However, if you strip the article of the content regarding alleged Kremlin-backed hackers, there emerges the outline of a very different – believable – possible scenario, one in which, as the article itself points out, we are ‘aggressive in [our] methods of fighting malware’.

Ok, let’s go over the article again…

In 2015 a certain NSA employee – a developer working on the U.S. cyber-espionage program – decided to work from home for a bit and so copied some secret documentation onto his (her?) home computer, probably via a USB stick. Now, on that home computer he’d – quite rightly and understandably – installed the best antivirus in the world, and – also quite rightly – had our cloud-based KSN activated. Thus the scene was set, and he continued his daily travails on state-backed malware in the comfort of his own home.

Let’s go over that just once more…

So, a spy-software developer was working at home on that same spy software, with all the tools and documentation he needed for such a task, and protecting himself from the world’s computer maliciousness with our cloud-connected product.

Now, what could have happened next? This is what:

Malware could have been detected as suspicious by the AV and sent to the cloud for analysis. This is the standard procedure for handling any newly found malware – and by ‘standard’ I mean standard across the industry; all our competitors use similar logic in one form or another. And experience shows it’s a very effective method for fighting cyberthreats (that’s why everyone uses it).

So what happens with the data that gets sent to the cloud? In ~99.99% of cases, analysis of the suspicious objects is done by our machine learning technologies: if they’re malware, they’re added to our malware detection database (and also to our archive), and the rest goes in the bin. The other ~0.01% of data is sent for manual processing by our virus analysts, who analyze it and give their verdicts as to whether it’s malware or not.
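For the technically curious, the flow just described can be sketched in a few lines of Python. This is only an illustration, not our actual pipeline: every name here (`Verdict`, `TriageState`, `triage`) is hypothetical, and the idea that the automated step hands over to the analysts by returning an "unsure" verdict is an assumption made for the sake of the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    """Hypothetical outcomes of the automated (machine learning) analysis."""
    MALWARE = "malware"
    CLEAN = "clean"
    UNSURE = "unsure"  # assumption: this is what triggers manual processing


@dataclass
class TriageState:
    """Illustrative stand-ins for the detection database, archive and analyst queue."""
    detection_db: set = field(default_factory=set)
    archive: list = field(default_factory=list)
    analyst_queue: list = field(default_factory=list)


def triage(sample_hash: str, verdict: Verdict, state: TriageState) -> str:
    """Route one suspicious object according to its automated verdict."""
    if verdict is Verdict.MALWARE:
        # Confirmed malware goes into the detection database and the archive.
        state.detection_db.add(sample_hash)
        state.archive.append(sample_hash)
        return "detected"
    if verdict is Verdict.CLEAN:
        # Everything that is not malware goes in the bin: nothing is stored.
        return "discarded"
    # The small remainder is handed to human analysts for a manual verdict.
    state.analyst_queue.append(sample_hash)
    return "manual"
```

Calling `triage("abc123", Verdict.MALWARE, TriageState())` would record the sample in both the database and the archive, while a `Verdict.CLEAN` sample leaves no trace at all.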

Ok – I hope that part’s all clear.

Next: What about the possibility of a hack into our products by Russian-government-backed hackers?

Theoretically such a hack is possible (program code is written by humans, and humans will make mistakes), but I put the probability of an actual hack at zero. Here’s one example as to why:

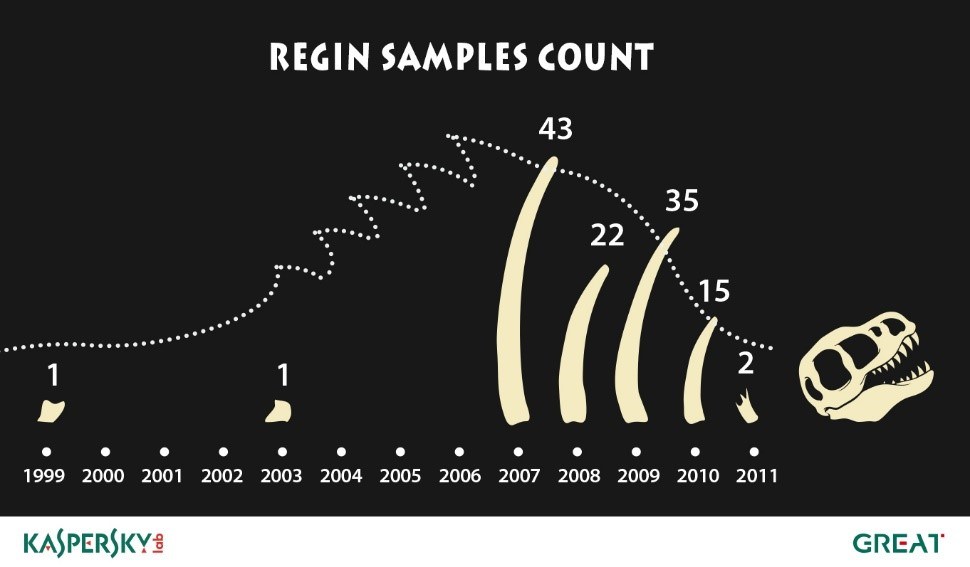

In the same year as the events the WSJ describes, we discovered an attack on our own network by an unknown, seemingly state-sponsored actor – Duqu2. Consequently we conducted a painstakingly detailed audit of our source code, updates and other technologies, and found… no signs whatsoever of any third-party breach of any of them. So as you can see, we take any reports about possible vulnerabilities in our products very seriously. And this new report about possible vulnerabilities is no exception, which is why we’ll be conducting another deep audit very soon.

The takeaway:

If the story about our product’s uncovering of government-grade malware on an NSA employee’s home computer is real, then that, ladies and gents, is something to be proud of. Proactively detecting previously unknown highly-sophisticated malware is a real achievement. And it’s the best proof there is of the excellence of our technologies, plus confirmation of our mission: to protect against any cyberthreat no matter where it may come from or its objective.

So, like I say… here’s to aggressive detection of malware. Cheers!