September 26, 2016

Laziness, Cybersecurity, and Machine Learning.

It’s just the way it is: the human being is a lazy creature. If it’s possible not to do something, we don’t do it. However, paradoxically this is a good thing, because laziness is… the engine of progress! What? How so? Well, if a job’s considered too hard or long-winded or complex for humans to do, certain lazy (but conscientious) humans (Homo Laziens?: ) give the job to a machine! In cybersecurity we call it optimization.

Analysis of millions of malicious files and websites every day, developing ‘inoculations’ against future threats, forever improving proactive protection, and solving dozens of other critical tasks – all of that is simply impossible without the use of automation. And machine learning is one of the main concepts used in automation.

Automation has existed in cybersecurity right from the beginning (of cybersecurity itself). I remember, for example, how back in the early 2000s I wrote the code for a robot to analyze incoming malware samples: the robot put the detected files into the corresponding folder of our growing malware collection based on its (the robot’s) verdict regarding its (the file’s!) characteristics. It was hard to imagine – even back then – that I used to do all that manually!

These days however, simply giving robots precise instructions for tasks you want them to do isn’t enough. Instead, instructions for tasks need to be given imprecisely. Yes, really!

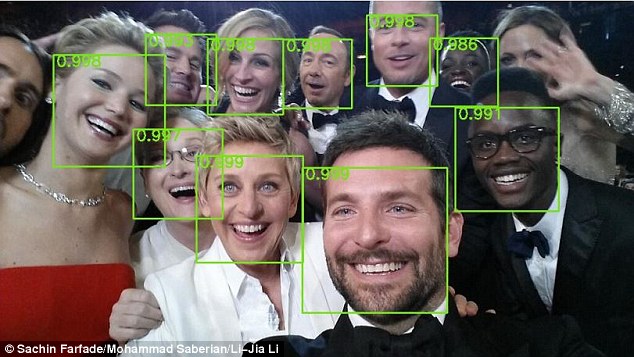

For example, ‘Find the human faces on this photograph’. For this you don’t describe how human faces are picked out and how human faces differ from those of dogs. Instead what you do is show the robot several photographs and add: ‘These things here are humans, this is a human face, and these here are dogs; now work the rest out yourself’! And that, in a nutshell, is the ‘freedom of creativity’ that calls itself machine learning.

ML + CS = Love

Without machine learning (ML), not a single cybersecurity vendor would have survived into this decade (that is, unless detection is simply copied from others). However, some startups present ML as representing a whole now revolution in cybersecurity (CS) – a revolution that, er, they ‘initiated and are spearheading’. But in actual fact ML has been applied in cybersecurity for more than a decade – only without marketing fanfare.

Machine learning is a discipline about which thousands of PhD dissertations and scholarly textbooks have been written, so that’s hardly going to fit into a single blog post. Even several blog posts. But anyway, do you, dear reader, really need all the academic technical detail? Of course not. So, instead, I’ll be telling you something of much more use – and which will fit into this here blog post: the tastiest, juiciest, most important and most breathtaking elements of this thing called machine learning – from our (KL’s) perspective.

At the start of the ‘journey’ we used various mathematical models of automation only for internal needs; for example, for automation of a malware analyst’s workstation (to pick out the most important bits from the flow of information), for clusterization (grouping objects as per attributes), and for optimization of web crawlers (determining the priority and intensity of crawling through millions of URLs based on weightings of different factors).

Later on it became clear that without introducing such smart technologies into our products, the flow of cyber-gunk would overwhelm us. What we needed were robots that could quickly and accurately answer certain complex questions, like: ‘Hey robot, show me the most suspicious files, as determined by you based on these examples’. Or: ‘Yo, robot. Look – this is what really cool heuristic procedures are like – ones that detect tens of thousands of objects. What you need to do is find common characteristics in other malicious samples, then implement those same procedures yourself – only on different objects’.

But hold on…

Before you go thinking this is all a cakewalk: When applying methods of machine learning to real tasks, a great many difficulties and nuances turn up. Especially in cybersecurity: the cyber-underground constantly invents new types of attacks, so no matter how good a mathematical model is, it needs to be constantly improved. And this is a key difficulty characteristic of machine learning in cybersecurity: we work in a dynamic, harsh environment in which machine learning comes up against constant counteraction directed right at it.

First, initially all these new attacks need to be found. Of course, the cyberswine hardly send us their wares for our detecting pleasure… just the opposite: they try very hard to hide them to stay under the radar for as long as possible so they can earn more criminal income for longer. The ongoing search for such attacks is expensive expert work with the use of highly complex instruments and intelligence.

Second, the analyst needs to train a robot to correctly work out what’s important and what isn’t. And this is reeeaaally tough, with all sorts of proverbial rakes scattered across the lawn just waiting to be stepped on. Just one example: the problem of overfitting.

A classic example of overfitting is as follows: mathematicians created a model for recognizing images of cows using many pictures of the animal. And, yep, the model started to recognize cows! But once they started to complicate the picture, the model recognized… nothing. So they had a look to see what was up. What they discovered was that the algorithm had become ‘too clever’ (kinda), and started deceiving itself: – it trained itself on the green field in the pictures upon which the cows grazed!

So, adding ‘brains’ to technologies is really challenging; it’s a long, hard road of trial and error demanding a combination of at least two types of expertise – that in data science and that in cybersecurity. By the mid-2000s we’d accumulated plenty of both, and started to include machine learning in our ‘combat’ technologies in products.

Ever since, automation in our products has advanced in leaps and bounds. Different mathematical approaches were introduced in products and components both large and small right across the spectrum: in anti-spam (classification of emails based on the degree of their spamness); anti-phishing (heuristic recognition of phishing sites); in parental control (picking out undesirable content); in anti-fraud; in protection against targeted attacks; in activity monitoring and more.

Not so quickly, Mr. Smith

After reading all about the successes of machine learning, there could arise the temptation to put such a smart machine algorithm directly into the computer of a customer and leave it be: since the algorithm is smart, let it learn. However, in the machine learning game there’s no place for a lone ranger. Here’s why:

First, such an approach is limited in terms of performance. The user needs a sensible balance between quality of protection and speed, and the development of existing technologies and addition of new ones – no matter how smart – will inevitably use up precious system resources.

Second, such ‘isolationism’ – no updates, no new study material – inevitably lowers the quality of protection. The algorithm needs to be taught regularly about completely new types of cyberattacks; otherwise its detection abilities become outdated quicker than you can say ‘the threat landscape is always changing’.

Third, a concentration of all ‘combat’ technologies on a computer gives the cyber-scum greater possibilities for studying the finer details of the protection and to then develop methods to counter it.

Those are the top-three reasons, but there are many more.

So what needs to be done?

It’s rather straightforward really: put all the big guns – the heavy-duty and most resource-intensive technologies of machine learning – somewhere a lot more sophisticated than users’ computers! To create a ‘remote brain’, which, based on data studied from millions of client computers, is able to quickly and accurately recognize an attack and deliver the required protection…

Little fluffy clouds

So, 10 years ago we created KSN (17 patents and patent applications) – the required ‘remote brain’. KSN is a cloud technology with advanced infrastructure connected to every protected computer, which uses hardly any endpoint resources and increases the quality of protection.

In essence, KSN is a Russian doll: the ‘cloud’ contains a lot of other smart technologies to fight cyberattacks. It also features ‘combat’ systems and constantly developing experimental models. I’ve already written about one of these – Astraea (patents US7640589, US8572740, US7743419), which since 2009 has been automatically analyzing events on protected computers to uncover unknown threats. Today Astraea processes in a day more than a billion events, in doing so calculating ratings for tens of millions of objects.

Though cloud technologies have proven how superior they are, all the same there are autonomous, isolated systems being sold. These have done a spot of preliminary machine learning and rarely update since they’re located on the client computer. Go figure.

But wait.

The companies that produce these isolated solutions say that, thanks to machine learning, they can detect the ‘new generation of malware’ without regular updates. But that detection interests no one, as it protects insignificant ‘surfaces’ which promise insufficient economic gain to the bad guys (too few users = not interested). Sometimes maybe they detect this or that, but you’d never hear about it. It’s not as if they’ve ever uncovered anything mega – like advanced spy attacks such as Duqu, Flame or Equation.

Today we analyze 99.9% of the cyberthreats using our infrastructural algorithms powered by machine learning. The time gap between uncovering suspicious behavior on a protected device and the issue of the respective new ‘tablet’ lasts on average 10 minutes. That is, of course, if we haven’t already caught the offending item with proactive protection (for example, automatic protection against exploits). There have been instances when, from the moment of finding a suspicious object to the issue of an update just 40 seconds passed. This led to much grumbling on underground forums: ‘Just how do these guys detect us so darn quickly? We just can’t keep ahead!’.

To summarize: cloud infrastructure + machine learning = OMG-effective protection. Even before, we rarely didn’t do well in independent tests; but since the KSN – we’ve become the undisputed No. 1. Moreover, we’ve maintained very low levels of false positives, and on speed we’ve among the best indicators in the industry.

Vintage Wine vs. Last Year’s Plonk

It seemed as if, here at last – a panacea was found against all cyber-bad! A lightweight client-interceptor on the endpoint, and all the heavy-duty work done in the cloud. But no. For if ever there’d be network problems, the endpoint would be unprotected. Indeed, practice has shown that the ideal environment for where smart technologies reside is between the two extremes – adopting a combination of autonomous and cloud landscapes.

Then there was the idea that machine learning could take the place of all other approaches to the total security paradigm. Aka, putting all one’s eggs into just one basket.

But… what will happen when the bad guys eventually understand how the algorithm works and learn how to get around the protection? That would mean all the mathematical models would need to be adjusted and an update issued for the protected device. And while the model is being adjusted and the update sent/received, the user remains all on his lonesome faced with a cyberattack – with no protection.

The conclusion is obvious: the best protection is a combination of different technologies, at all layers, taking into account all attack vectors.

And finally, the most important thing: machine learning is created by humans – experts of the very highest caliber in analysis of data and cyber-dangers. You can’t have one without the other. It’s all about humachine intelligence.

And it’s a long process of trial and error that takes many years. It’s like a vintage wine – it’ll always be better than last year’s plonk, no matter how pretty the plonk’s label. Who set out on the long and winding road of machine leaning the earliest – he’ll have the more experienced experts, better technologies, and more reliable protection. And that’s not just my opinion. It’s confirmed by tests, investigations/research and customers.

Bonus Track: O tempora o mores!

The business model of some IT security startups is clear: they go with ‘It doesn’t matter how much you earn, it’s how much you’re worth‘. Their aim is a flurry of intense marketing activity based on provocations, manipulations and faking – all to blow up bubbles of expectations.

If a startup isn’t based on trickery, it’ll surely realize that without multi-layered protection, without the application of all modern protective technologies, and without the development of its own expertise – it’s doomed; because investment money and the ‘credit of trust’ of users are coming to an end.

On the other hand, to make a good security product from nothing which ticks all the boxes is difficult, if not impossible these days. For its not so much money you need today as brains and time. Still, I guess some startups chose to start small and build up steadily.

I’m sure that sooner or later ‘revolutionary’ startups will start introducing properly tested and time-proven technologies that demonstrate their effectiveness. And the best of the young startups, which build up genuine experience and accumulate their own expertise will start to expand their protection arsenal to increasingly close off potential attack scenarios. Gradually getting their products up to a professional level, they’ll demonstrate the correlation to objective criteria of quality adopted in true cybersecurity.

#MachineLearning is fundamental to #cybersecurity. @e_kaspersky gives some interesting facts about it #ai_oilTweet