Just in case you missed the first two, this is the third episode of my cyber-yesteryear chronicles. Since I’m in lockdown like most folks, I have more time on my hands for a leisurely mosey down cyberseKurity memory lane. Normally I’d be on planes jetting here, there and everywhere for business and tourism – all of which usually takes up most of my time. But since none of that – at least offline/in person – is possible at the moment, I’m using part of that unused time to put fingers to keyboard for a steady stream of personal / Kaspersky Lab / cyber-historical nostalgia: in this post – from the early to mid-nineties.

Typo becomes a brand

In the very beginning, all our antivirus utilities were named following the ‘-*.EXE’ template. That is, for example, ‘-V.EXE’ (antivirus scanner), ‘-D.EXE’ (resident monitor), ‘-U.EXE’ (utilities). The ‘-’ prefix was used to make sure that our programs would be at the very top of a list of programs in a file manager (tech-geekiness meets smart PR moves from the get-go?:).

Later, when we released our first full-fledged product, it was named ‘Antiviral Toolkit Pro’. Logically, that should have been abbreviated to ‘ATP’; but it wasn’t…

Somewhere around the end of 1993 or the beginning of 1994, Vesselin Bontchev, who remembered me from previous meet-ups (see Cyber-yesteryear – pt. 1), asked me for a copy of our product for testing at the Virus Test Center of Hamburg University, where he worked at the time. Of course, I obliged, and while zip-archiving the files I accidentally named the archive AVP.ZIP (instead of ATP.ZIP), and off I sent it to Vesselin unawares. Some time later Vesselin asked me for permission to put the archive onto an FTP server (so it would be publicly available), and again I obliged. A week or two later he told me: ‘Your AVP is becoming really rather popular on the FTP!’

‘What AVP?’, I asked.

‘What do you mean, “What AVP”? The one you sent me in the archive file, of course!’

‘WHAT?! Rename it right away – that’s a mistake!’

‘Too late. It’s already out there – and known as AVP!’

And that was that: AVP we were stuck with! Mercifully, we (kinda) got away with it – Anti-Viral toolkit Pro. Like I say – kinda :). Still, in for a penny, in for a pound: all our utilities were renamed by dropping the ‘-’ prefix and putting ‘AVP’ in its place – and it’s still used today in some of the names of our modules.

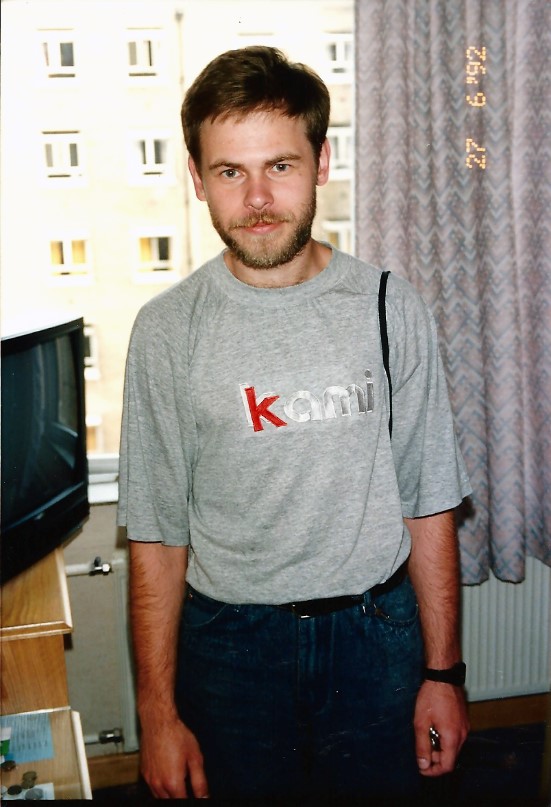

First business trips – to Germany for CeBIT

In 1992, Alexey Remizov – my boss at KAMI, where I first worked – helped me get my first foreign-travel passport and took me with him to the CeBIT exhibition in Hannover, Germany. We had a modest stand there, shared with a few other Russian companies: half our table was covered with KAMI transputer tech, the other half with our antivirus offerings. We were rewarded with a tiny bit of new business, but nothing great. All the same, it was a very useful trip…

Our impressions of CeBIT back then were of the oh-my-grandiose flavor. It was just so huge! And it wasn’t all that long since Germany had been reunified, so, to us, it was all a bit West Germany – computer-capitalism gone bonkers! Indeed – a cultural shock (followed by a second cultural shock when we arrived back in Moscow – more on that later).

Given the enormity of CeBIT, our small, shared stand barely got noticed. Still, it was the proverbial ‘foot in the door’ or ‘the first step is the hardest’ or some such. For it was followed by a repeat visit to CeBIT four years later – that time to start building our European (and then global) partner network. But that’s a topic for another post (one that I think should be especially interesting for folks beginning their own long business journeys).

Btw, even as far back as then, I understood our project was badly in need of at least some kind of PR/marketing support. But since we had, like, hardly two rubles to rub together, plus the fact that journalists had never heard of us, it was tricky getting any. Still, as a direct result of our first trip to CeBIT, we managed to get a self-written piece all about us into the Russian technology magazine ComputerPress in May 1992: home-grown PR!

Fee-fi-fo-fum, I smell the dollars of Englishmen!

My second business trip was in June-July of the same year – to the UK. One result of this trip was another article, this time in Virus Bulletin, entitled The Russians Are Coming, which was our first foreign publication. Btw – the article mentions ‘18 programmers’. There were probably 18 folks working at KAMI overall, but in our AV department there were just the three of us.

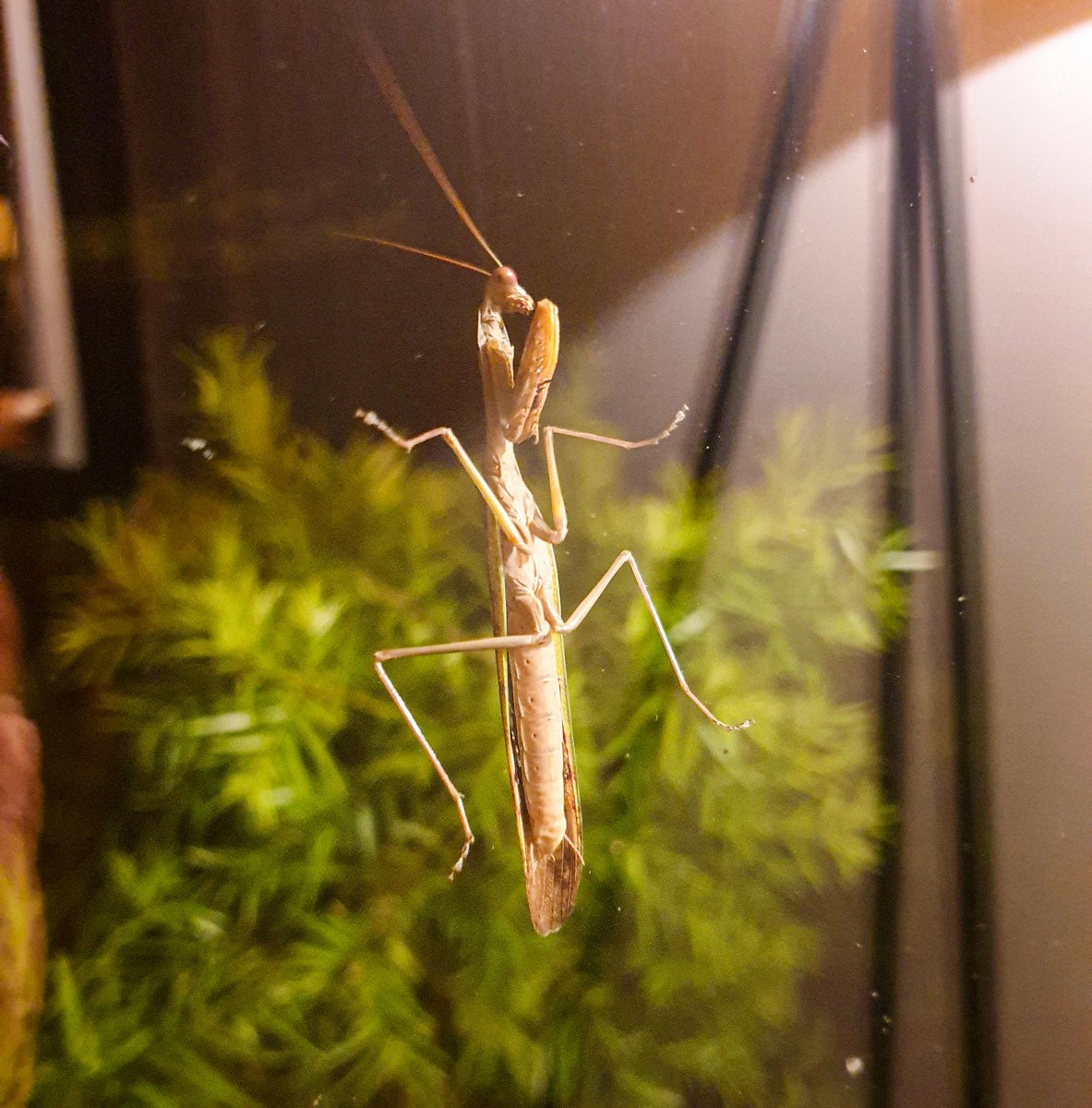

London, June 1992

Read on…