Last spring (2015), we discovered Duqu 2.0 – a highly professional, very expensive, cyber-espionage operation. Probably state-sponsored. We identified it when we were testing the beta-version of the Kaspersky Anti Targeted Attack (KATA) platform – our solution that defends against sophisticated targeted attacks just like Duqu 2.0.

And now, a year later, I can proudly proclaim: hurray!! The product is now officially released and fully battle ready!

But first, let me now go back in time a bit to tell you about why things have come to this – why we’re now stuck with state-backed cyber-spying and why we had to come up with some very specific protection against it.

(While for those who’d prefer to go straight to the beef in this here post – click here.)

‘The good old days’ – words so often uttered as if bad things just never happened in the past. The music was better, society was fairer, the streets were safer, the beer had a better head, and on and on and on. Sometimes, however, things really were better; one example being how relatively easy it was to fight cyber-pests in years past.

Of course, back then I didn’t think so. We were working 25 hours a day, eight days a week, all the time cursing the virus writers and their phenomenal reproduction rate. Each month (and sometimes more often) there were global worm epidemics and we were always thinking that things couldn’t get much worse. How wrong we were…

At the start of this century viruses were written mainly by students and cyber-hooligans. They’d neither the intention nor the ability to create anything really serious, so the epidemics they were responsible for were snuffed out within days – often using proactive methods. They simply didn’t have any motivation for coming up with anything more ominous; they were doing it just for kicks when they’d get bored of Doom and Duke Nukem :).

The mid-2000s saw big money hit the Internet, plus new technologies that connected everything from power plants to mp3 players. Professional cybercriminal groups also entered the stage seeking the big bucks the Internet could provide, while cyber-intelligence-services-cum-armies were attracted to it by the technological possibilities it offered. These groups had the motivation, means and know-how to create reeeaaaally complex malware and conduct reeeaaaally sophisticated attacks while remaining under the radar.

Around about this time… ‘antivirus died’: traditional methods of protection could no longer maintain sufficient levels of security. Then a cyber-arms race began – a modern take on the eternal model of power based on violence – either attacking using it or defending against its use. Cyberattacks became more selective/pinpointed in terms of targets chosen, more stealthy, and a lot more advanced.

In the meantime ‘basic’ AV (which by then was far from just AV) had evolved into complex, multi-component systems of multi-level protection, crammed full of all sorts of different protective technologies, while advanced corporate security systems had built up yet more formidable arsenals for controlling perimeters and detecting intrusions.

However, that approach, no matter how impressive on the face of it, had one small but critical drawback for large corporations: it did little to proactively detect the most professional targeted attacks – those that combine unique malware with tailored social engineering and zero-days. Malware that can go unnoticed by security technologies.

I’m talking attacks carefully planned months if not years in advance by top experts backed by bottomless budgets and sometimes state financial support. Attacks like these can sometimes stay under the radar for many years; for example, the Equation operation we uncovered in 2014 had roots going back as far as 1996!

Banks, governments, critical infrastructure, manufacturing – tens of thousands of large organizations in various fields and with different forms of ownership (basically the basis of today’s world economy and order) – all of it turns out to be vulnerable to these super professional threats. And the demand for targets’ data, money and intellectual property is high and continually rising.

So what’s to be done? Just accept these modern day super threats as an inevitable part of modern life? Give up the fight against these targeted attacks?

No way.

Anything that can be attacked – no matter how sophisticatedly – can be protected to a great degree if you put serious time and effort and brains into that protection. There’ll never be 100% absolute protection, but there is such a thing as maximal protection, which makes attacks economically unfeasible to carry out: barriers so formidable that the aggressors decide to give up putting vast resources into getting through them, and instead go off and find some lesser protected victims. Of course there’ll be exceptions, especially when politically motivated attacks against certain victims are on the agenda; such attacks will be doggedly seen through to the end – a victorious end for the attacker; but that’s no reason to quit putting up a fight.

All righty. Historical context lesson over, now to that earlier mentioned sirloin…

…Just what the doctor ordered against advanced targeted attacks – our new Kaspersky Anti Targeted Attack platform (KATA).

So what exactly is this KATA, how does it work, and how much does it cost?

First, a bit on the anatomy of a targeted attack…

A targeted attack is always exclusive: tailor-made for a specific organization or individual.

The baddies behind a targeted attack start out by scrupulously gathering information on the targets right down to the most minor of details – for the success of an attack depends on the completeness of such a ‘dossier’ almost as much as the budget of the operation. All the targeted individuals are spied on and analyzed: their lifestyles, families, hobbies, and so on. How the corporate network is constructed is also studied carefully. And on the basis of all the information collected an attack strategy is selected.

Next, (i) the network is penetrated and remote (& undetected) access with maximum privileges is obtained. After that, (ii) the critical infrastructure nodes are compromised. And finally, (iii) ‘bombs away!’: the pilfering or destruction of data, the disruption of business processes, or whatever else might be the objective of the attack, plus the equally important covering of one’s tracks so no one knows who’s responsible.

The motivation, the duration of the various prep-and-execution stages, the attack vectors, the penetration technologies, and the malware itself – all of it is very individual. But no matter how exclusive an attack gets, it will always have an Achilles’ heel. For an attack will always cause at least a few tiny noticeable happenings: unusual network activity, odd behavior of files and other objects, and other anomalies. So seeing the bird’s-eye view – the whole picture formed from different sources around the network – makes it possible to detect a break-in.

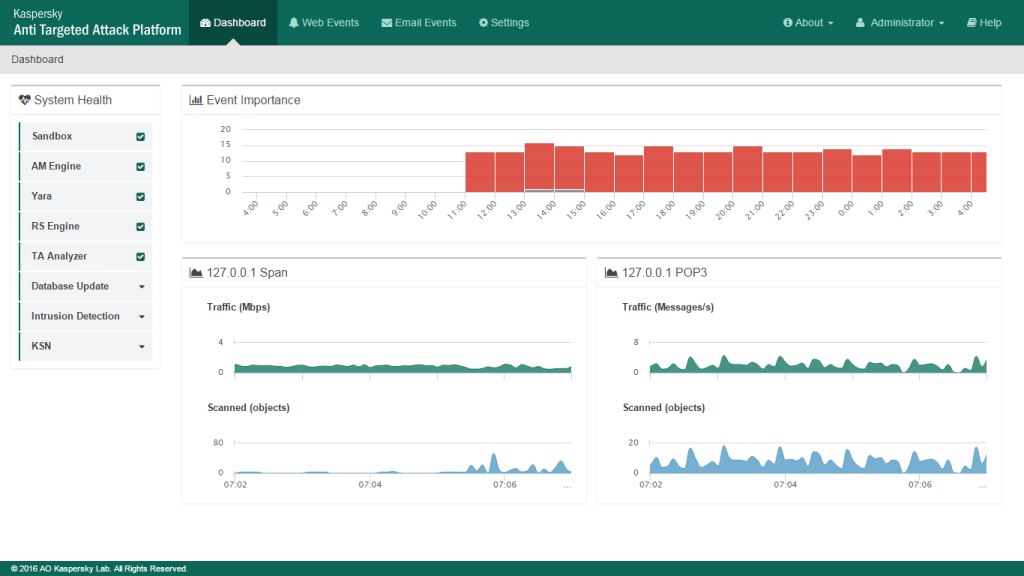

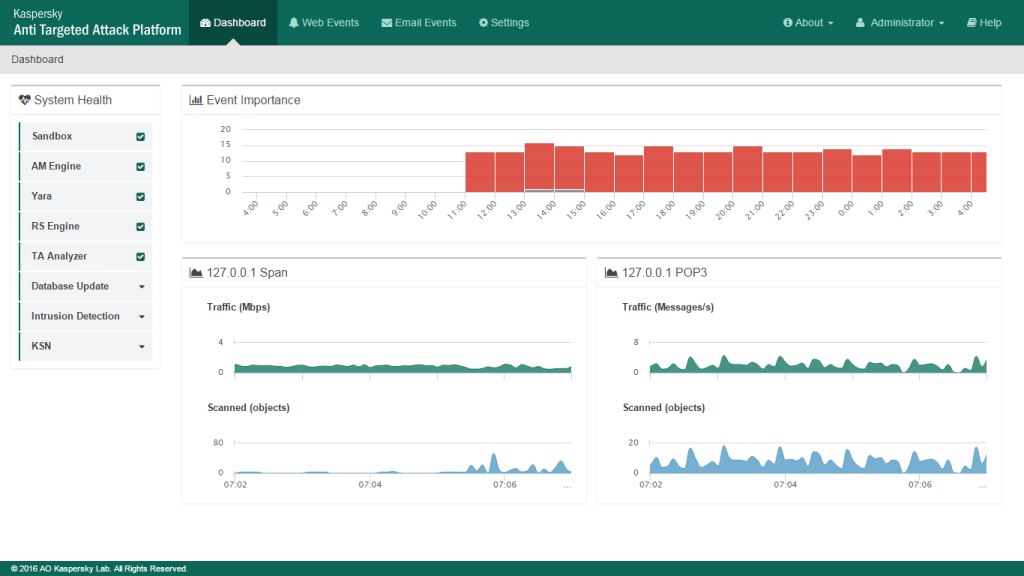

To collect all the data about such anomalies and build the big picture, KATA uses sensors – special ‘e-agents’ – which continuously analyze IP/web/email traffic plus events on workstations and servers.

For example, we intercept IP traffic (HTTP(S), FTP, DNS) using TAP/SPAN; the web sensor integrates with the proxy servers via ICAP; and the mail sensor is attached to the email servers via POP3(S). The agents are real lightweight (for Windows – around 15 megabytes), are compatible with other security software, and make hardly any impact at all on either network or endpoint resources.

All collected data (objects and metadata) are then transferred to the Analysis Center for processing using various methods (sandbox, AV scanning and adjustable YARA rules, checking file and URL reputations, vulnerability scanning, etc.) and archiving. It’s also possible to plug the system into our KSN cloud, or to keep things internal – with an internal copy of KpSN for better compliance.
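To make the 'several methods, one verdict' idea a bit more tangible, here is a toy sketch in Python. It is not how KATA is implemented: the three checks are simplistic stand-ins for reputation lookup, rule matching and sandboxing, and every name in it (`CollectedObject`, `reputation_check`, `rule_check`, `sandbox_check`, `analyze`) is made up for illustration. The only point is that each method scores the same object independently, and the combined result is what gets reported.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class CollectedObject:
    """A file or URL picked up by a sensor, plus its metadata (hypothetical shape)."""
    name: str
    sha256: str
    metadata: Dict[str, str] = field(default_factory=dict)


# A check takes an object and returns a suspicion score from 0.0 to 1.0.
Check = Callable[[CollectedObject], float]

# Assumption: a tiny local deny-list standing in for a reputation service.
KNOWN_BAD_HASHES = {"deadbeef"}


def reputation_check(obj: CollectedObject) -> float:
    """Stand-in for a file/URL reputation lookup."""
    return 1.0 if obj.sha256 in KNOWN_BAD_HASHES else 0.0


def rule_check(obj: CollectedObject) -> float:
    """Stand-in for rule matching: flags a couple of risky file extensions."""
    return 0.6 if obj.name.lower().endswith((".scr", ".pif")) else 0.0


def sandbox_check(obj: CollectedObject) -> float:
    """Stand-in for a sandbox run: reads a behavior flag from the metadata."""
    return 0.8 if obj.metadata.get("spawns_shell") == "yes" else 0.0


def analyze(obj: CollectedObject, checks: Dict[str, Check]) -> dict:
    """Run every check on the object and keep the highest score as the verdict."""
    scores = {label: check(obj) for label, check in checks.items()}
    return {"object": obj.name, "scores": scores, "verdict": max(scores.values())}


CHECKS: Dict[str, Check] = {
    "reputation": reputation_check,
    "rules": rule_check,
    "sandbox": sandbox_check,
}

sample = CollectedObject("invoice.scr", "abc123", {"spawns_shell": "yes"})
report = analyze(sample, CHECKS)
```

Taking the maximum score is just one simple way to combine results; a real system could weight the methods differently or look at how they agree with each other.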

Once the big picture is assembled, it’s time for the next stage! KATA reveals suspicious activity and can inform the admins and SIEM (Splunk, Qradar, ArcSight) about any unpleasantness detected. Even better – the longer the system works and the more data accumulates about the network, the more effective it is, since atypical behavior becomes easier to spot.
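Why does more accumulated data make atypical behavior easier to spot? A minimal sketch, assuming the simplest possible model (not the one KATA actually uses): keep a history of some measurement per host, say daily outbound traffic in megabytes, and flag a new value that sits far from the historical average. The function name `is_atypical`, the threshold and the sample numbers are all illustrative assumptions.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_atypical(history: Sequence[float], observed: float,
                threshold: float = 3.0, min_samples: int = 5) -> bool:
    """Flag a value that lies more than `threshold` standard deviations from the mean.

    With too little history there is no baseline yet, so nothing is flagged.
    """
    if len(history) < min_samples:
        return False
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Perfectly flat history: any change at all is a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical daily outbound traffic (MB) for one workstation.
baseline = [100, 110, 90, 105, 95]

quiet_day = is_atypical(baseline, 104)      # close to the usual level
exfil_like = is_atypical(baseline, 500)     # far outside the usual range
too_early = is_atypical([100, 110], 500)    # not enough history to judge
```

With only two days of history the jump to 500 MB goes unflagged, while with five days it stands out clearly, which is the intuition behind the system getting more effective the longer it runs.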

More details on how KATA works… here.

Ah yes; nearly forgot… how much does all this cost?

Well, there’s no simple answer to that one. The price of the service depends on dozens of factors, including the size and topology of the corporate network, how the solution is configured, and how many accompanying services are used. One thing is clear though: the cost pales into insignificance if compared with the potential damage it prevents.