September 2, 2020

Cybersecurity – the new dimension of automotive quality.

Quite a lot of folks seem to think that the automobile of the 21st century is a mechanical device. Sure, it has added electronics for this and that, some more than others, but still, at the end of the day – it’s a work of mechanical engineering: chassis, engine, wheels, steering wheel, pedals… The electronics – ‘computers’ even – merely help all the mechanical stuff out. They must do – after all, dashboards these days are a sea of digital displays, with hardly any analog dials to be seen at all.

Well, let me tell you straight: it ain’t so!

A car today is basically a specialized computer – a ‘cyber-brain’, controlling the mechanics-and-electrics we traditionally associate with the word ‘car’ – the engine, the brakes, the turn indicators, the windscreen wipers, the air conditioner, and in fact everything else.

In the past, for example, the handbrake was 100% mechanical. You’d wrench it up – with your ‘hand’ (imagine?!), and it would make a kind of grating noise as you did. Today you press a button. 0% mechanics. 100% computer controlled. And it’s like that with almost everything.

Now, most folks think that a driver-less car is a computer that drives the car. But if there’s a human behind the wheel of a new car today, then it’s the human doing the driving (not a computer), ‘of course, silly!’

Here I go again…: that ain’t so either!

With most modern cars today, the only difference between those that drive themselves and those that are driven by a human is that in the latter case the human controls the onboard computers. While in the former – the computers all over the car are controlled by another, main, central, very smart computer, developed by companies like Google, Yandex, Baidu and Cognitive Technologies. This computer is given the destination, it observes all that’s going on around it, and then decides how to navigate its way to the destination, at what speed, by which route, and so on based on mega-smart algorithms, updated by the nano-second.

A short history of the digitalization of motor vehicles

So when did this move from mechanics to digital start?

Some experts in the field reckon the computerization of the auto industry began in 1955 – when Chrysler started offering a transistor radio as an optional extra on one of its models. Others, perhaps thinking that a radio isn’t really an automotive feature, reckon it was the introduction of electronic ignition, ABS, or electronic engine-control systems that ushered in automobile-computerization (by Pontiac, Chrysler and GM in 1963, 1971 and 1979, respectively).

No matter when it started, what followed was for sure more of the same: more electronics; then things started becoming more digital – and the line between the two is blurry. But I consider the start of the digital revolution in automotive technologies as February 1986, when, at the Society of Automotive Engineers convention, the company Robert Bosch GmbH presented to the world its digital network protocol for communication among the electronic components of a car – CAN (controller area network). And you have to give those Bosch guys their due: still today this protocol is fully relevant – used in practically every vehicle the world over!

// Quick nerdy post-CAN-introduction digi-automoto backgrounder:

The Bosch boys gave us various types of CAN buses (low-speed, high-speed, FD-CAN), while today there’s FlexRay (transmission), LIN (low-speed bus), optical MOST (multimedia), and finally, on-board Ethernet (today – 100mbps; in the future – up to 1gbps). When cars are designed these days various communications protocols are applied. There’s drive by wire (electrical systems instead of mechanical linkages), which has brought us: electronic gas pedals, electronic brake pedals (used by Toyota, Ford and GM in their hybrid and electro-mobiles since 1998), electronic handbrakes, electronic gearboxes, and electronic steering (first used by Infinity in its Q50 in 2014).

BMW buses and interfaces

BMW buses and interfaces

![YOU CAN NEVER GET TOO MANY AWARDS. SEE 1ST COMMENT FOR ENGLISH ⏩

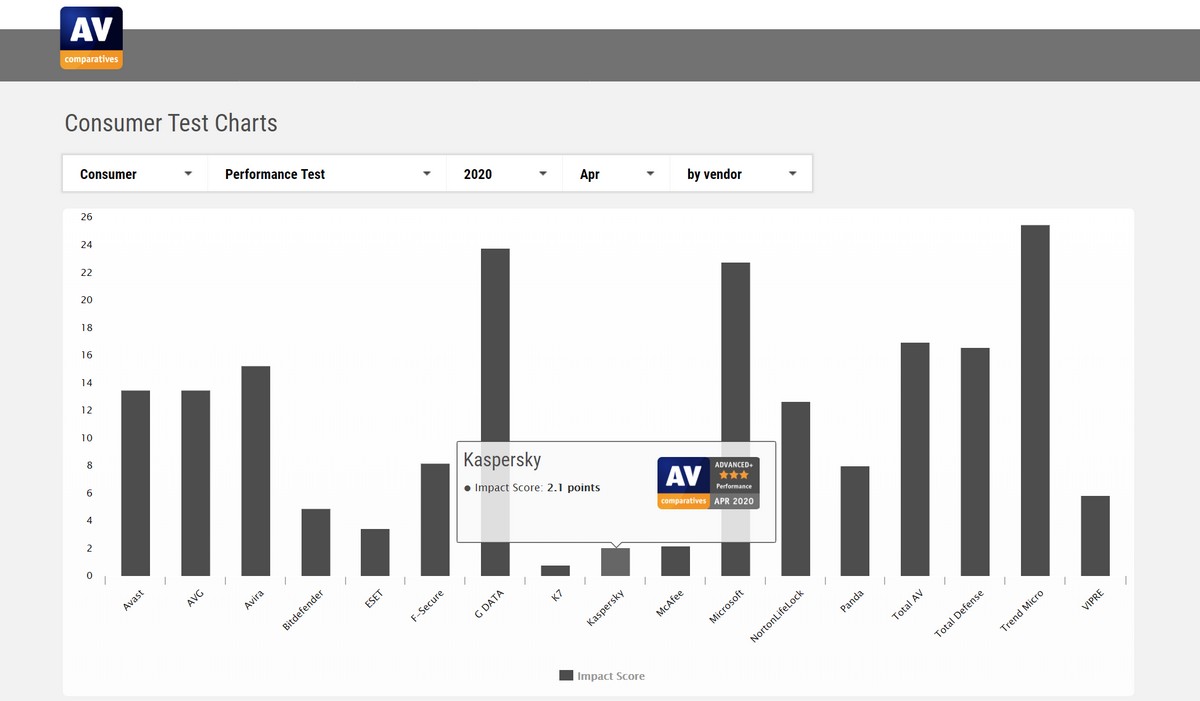

"А из нашего окна страна Австрия видна!" - практически (с). Но в этих австриях я был не смотреть из окна, а по многочисленным деловым делам, первое из которых - лично получить несколько важных наград и множество сертификатов от независимой тестовой лаборатории AV-Comparatives.

Это далеко не первая наша награда. Скажу больше - на протяжении последних десяти лет по результатам независимых тестов к нам даже близко ни один конкурент не подобрался. Но почему тогда такое внимание конкретно к этой победе? Ответ простой: густопопсовый геополитизм. В наше весьма геополитически [очень мягко говоря] непростое время... Ну, если отбросить все казённые слова, то будет, как в известном анекдоте про поручика Ржевского. В той самой истории, когда ему указали повторить свою фразу без матерщины. На что тот ответил: "Ну, в таком случае я просто молчал".

Так вот, в наше "поручико-ржевско-молчаливое время" участвовать и получить первые места в европейских тестах - это за пределами научной и ненаучной фантастики. Что в целом совпадает с одной из основных парадигм моей жизни: "Мы делаем невозможное. Возможное сделают и без нас" (с). Большими трудами и непомерными усилиями - да! Это можно! Мы заделали такие продукты, такие технологии, такую компанию - что даже в непростое время нас и в Европах знают, уважают, любят и пользуются. Ура!](https://scontent-iad3-2.cdninstagram.com/v/t51.29350-15/430076034_1096357205018744_692310533755868388_n.heic?stp=dst-jpg&_nc_cat=103&ccb=1-7&_nc_sid=18de74&_nc_ohc=XLII-tX29aoAX80SM4u&_nc_ht=scontent-iad3-2.cdninstagram.com&edm=ANo9K5cEAAAA&oh=00_AfBINCtkZ3-r_aTvdSC36JELI05V6PuBnMWs672PK3GsBQ&oe=65E63D48)