October 13, 2021

Ransomware: how we’re making our protection against it even better.

Being a cybersecurity developer: it’s a tough job, but someone’s got to do it (well!).

Our products seek and destroy malware, block hacker attacks, do update management, shut down obtrusive ad banners, protect privacy, and TONS more… and it all happens in the background (so as not to bother you) and at a furious pace. For example, KIS can check thousands of objects on either your computer or your smartphone in just one second, while your device’s resource usage is near zero: we’ve even set the speedrunning world record playing the latest Doom with KIS working away in the background!

Keeping things running so effectively and at such a furious pace has required, and still requires, the work of hundreds of developers, and has seen thousands of human-years invested in R&D. Just a millisecond of delay here or there lowers the overall performance of a computer in the end. But at the same time we need to be as thorough as possible so as not to let a single cyber-germ get through.

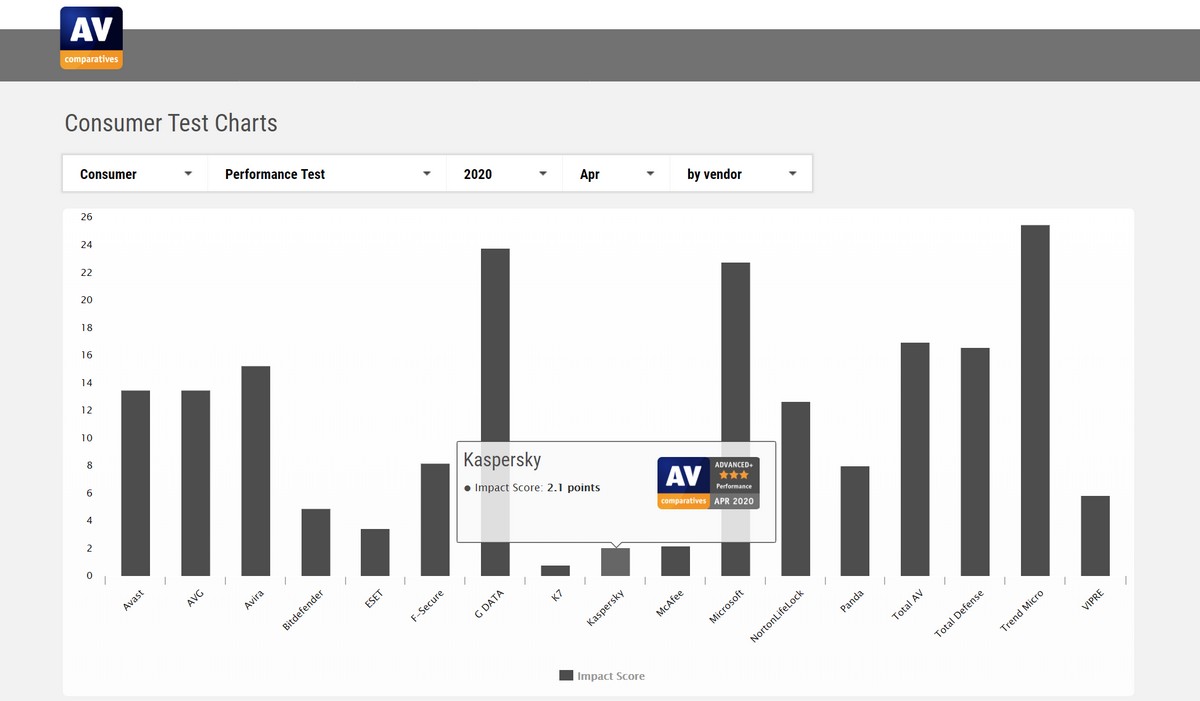

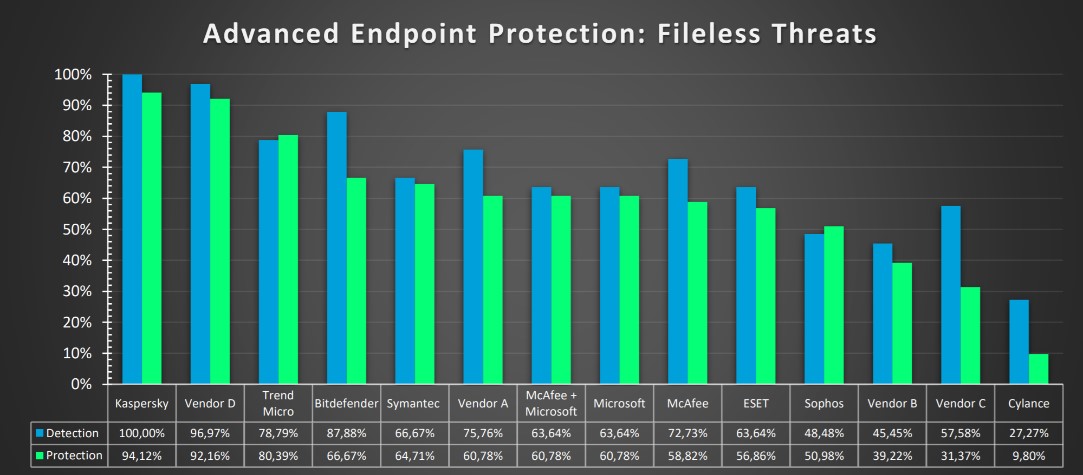

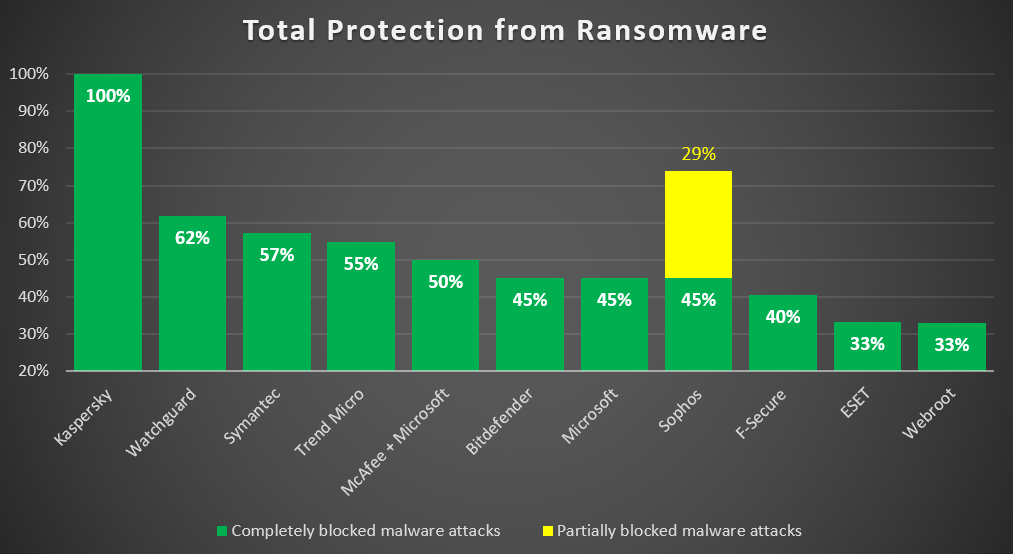

Recently I wrote a post showing how we demolished all competition (10 other popular cybersecurity products) in testing for protection against ransomware – today the most dangerous cyber-evil of all. So how do we get top marks on quality of protection and speed? Simple: by having the best technologies, plus the most no-compromise detection stance, multiplied by optimization.

But, particularly against ransomware, we’ve gone one step further: we’ve patented new technology for finding unknown ransomware with the use of smart machine-learning models. Oh yes.

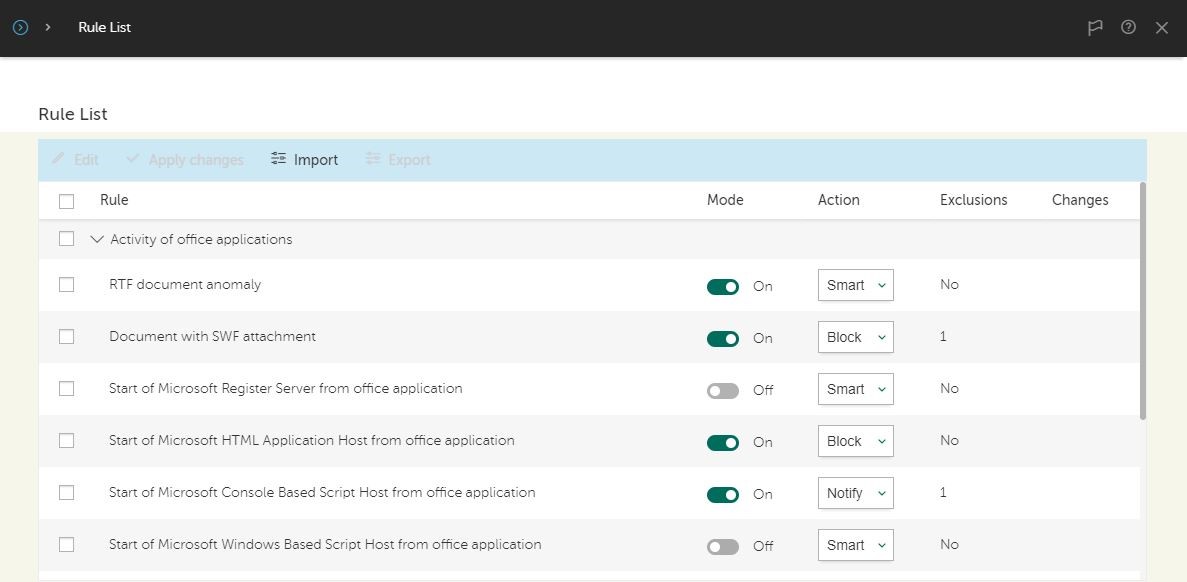

The best protection from cyberattacks is multi-level protection. And that means not simply using different protective tools from different developers, but also protecting at the different stages of malware’s activity: penetration, deployment, interaction with the command center, and launch of the malicious payload (and this is how we detect the tiniest of hardly-noticeable anomalies in the system, analysis of which leads to the discovery of fundamentally new cyberattacks).

Now, in the fight against ransomware, protective products traditionally underestimate the final stage – the stage of the actual encryption of data. ‘But isn’t it a bit late for a Band-Aid?’, you may logically enquire. Well, as the testing has shown (see the above link), it is a bit too late for those products that cannot roll back malware activity, but not for products that can and do. But you only get such functionality in our products and one other (yellow!) product. Detecting attempts at encryption is the last chance to grab malware red-handed, zap it, and return the system to its original state!

Ok, but how can you tell – quickly, since time is of course of the essence – when encryption is taking place?