The world seems to be slowly opening back up – at least a little, at least in some places. Some countries are even opening up their borders. Who’d have thought it?

Of course, some sectors will open up slower than others, like large-scale events, concerts and conferences (offline ones – where folks turn up to a hotel/conference center). Regarding the latter, our conferences too have been affected by the virus from hell. These have gone from offline to online, and that includes our mega project the Security Analyst Summit (SAS).

This year’s SAS should have taken place this April in one of our favorite (for other K-events) host cities, Barcelona. Every year – apart from this one – it takes place somewhere cool (actually, normally quite hot:); for example, it was in Singapore in 2019, and Cancun, Mexico, in 2018. We’d never put on a SAS in Barcelona though, as we thought it might not be ‘fun’ or ‘exotic’ enough. But given that folks just kept on suggesting the Catalonian city as a venue, well, we finally gave in. But today, in May, we still haven’t had a SAS in Barcelona, as of course the offline, planned one there had to be postponed. But in its place we still had our April SAS – only on everyone’s sofa at home online! Extraordinary measures for extraordinary times. Extraordinarily great the event turned out to be too!

We’re still planning on putting on the offline SAS in Barcelona – only later on, covid permitting. And I’m forever the optimist: I’m sure it will go ahead as planned.

It turns out there are quite a few upsides to having a conference online. You don’t have to fly anywhere, and you can view the proceedings all while… in bed if you really want to! The time saved and money saved are really quite significant. I myself watched everything from a quiet corner of the flat (after donning my event t-shirt to get into SAS mode!). There were skeptics, however: an important element of any conference – especially such a friendly, anti-format one like SAS – is the live, human, face-to-face interaction, which will never be replaced by video conferencing.

I was really impressed with how things went. Kicking it off, we had more than 3000 folks registered, of whom more than a thousand were actually watching at any one time over the three days – peaking sometimes above 2000. Of course most would have picked and chosen their segments to watch instead of watching it non-stop. The newly introduced training sessions, too, were well attended: around 700 for all of them – a good indicator folks found them interesting.

And for SAS@Home a special program had been prepared – and all in just two weeks! Why? Well, the heart of our conference is hardboiled, hardcore geekfest techy stuff: very detailed investigations and reports from the world’s top cybersecurity experts. But for SAS@Home the audience was to be bigger in number, and broader in audience profile – not just tech-heads; so we experimented – we placed an emphasis on a learning program, not in place of the detailed investigations and reports, but in addition to them.

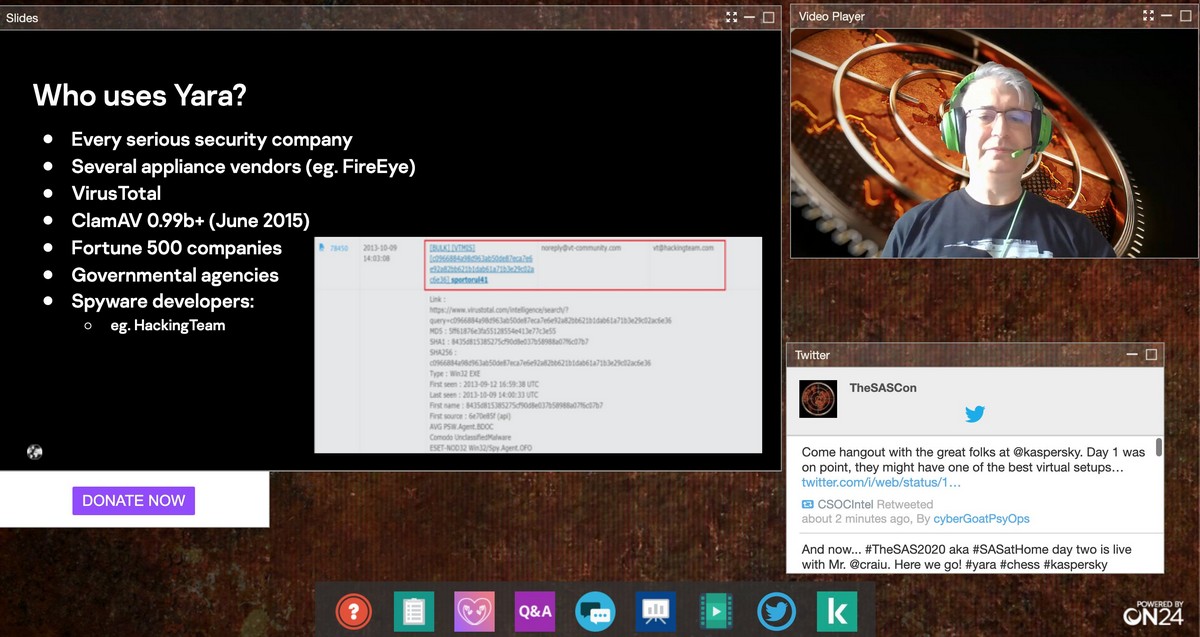

And we seemed to have gotten the balance just right. There was the story of the Android Trojan PhantomLance in Google Play, which for several years attacked Vietnamese Android users. There were presentations on network security and zero-day vulnerabilities. On the second day there was the extraordinarily curious talk by our GReAT boss, Costin Raiu, about YARA rules, with a mini-investigation about chess as a bonus!

After that there was Denis Makrushkin on bug-hunting and web applications. And on the third day things got really unusual. It’s not every cybersecurity conference where you can hear about nuances of body language; or where, straight after that, you hear about selecting methods of statistical binary analysis! But at SAS – par for the course ).

As per tradition, a huge thanks to everyone who helped put on the show: all the speakers, the organizers, the partners from SecurityWeek, the viewers, the online chatters, and the tweeters. And let’s not forget the flashmob we launched during SAS – quarantunities – dedicated to what folks have been getting up to during lockdown at home, including someone starting to cook every day, someone learning French, and someone else switching from life in the metropolis to that in the countryside.

In all, a great success. Unexpected format, but one that worked, and then some. Now, you’ll no doubt be tiring a little of all the positivity-talk of late about using the crisis and lockdown to one’s advantage. Thing is, in this instance, I can’t do anything but be positive, as it went so unbelievably well! Another thing: ‘We’ve had a meeting, and I’ve decided’ (!) that this online format is here to stay – even after covid!

Finally, one last bit of positivism (really – the last one, honest :). As our experts David Jacoby and Maria Namestnikova both pointed out during the final session, there are other positive things that have come out of quarantining at home: more folks are finding the time to stay fit with home exercise routines; there’s an emphasis being put on physical health generally (less rushing about and grabbing sandwiches and takeaways, etc.); folks are helping each other more; and levels of creativity are on the rise. Indeed, I’ve noticed all those things myself too. Nice. Positive. Eek ).

That’s all from me for today folks. And that’s all from SAS until we finally get to sunny Barcelona. Oh, and don’t forget another one for your diary for next year: SAS@Home-2021!

PS: Make sure to subscribe – and click the bell for notifications!! – to our YouTube channel: we’ll gradually be putting up recordings of all the sessions there. Yesterday the first one was published!…