“Patents against innovation”. Sounds as paradoxical as “bees against honey”, “hamburger patties against buns”, “students against sex” or “rock ‘n’ roll against drugs”.

Patents against innovation? How can that be possible? Patents exist to protect inventors’ rights, to provide a return on R&D investment, and generally to stimulate technological progress. Well, maybe it’s like that for some things, but in today’s software world – no way.

Today’s patent law regarding software is…well, it’s a bit like one of those circus mirrors that distort reality. Patent law is now so far removed from common sense that it’s patently absurd; the whole system needs to be overhauled right down to its roots. ASAP! Otherwise the very innovations that patents are meant to encourage and protect will simply fail to materialize. (Good job, patent system. Stellar work.)

So how did everything end up so messed up?

Well, although patents were originally meant, virtuously enough, to protect inventors, today they’ve mainly turned into nothing more than an extortion tool whose effect is the exact opposite of protecting innovation. The contemporary patent business is a technological racket: a cross-breed of a thieving magpie and a kleptomaniac monkey, with a malicious instinct to drag anything of value back to its lair.

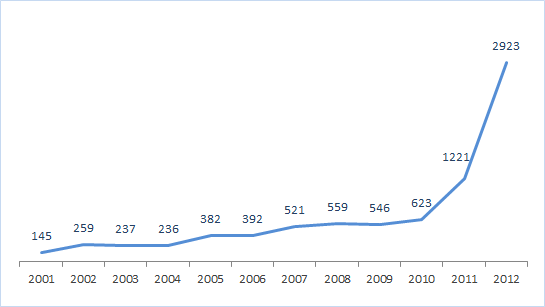

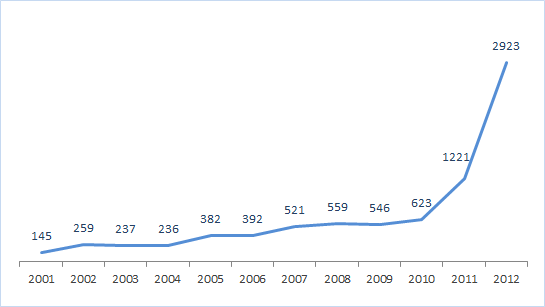

Growth in the number of patent lawsuits involving “trolls”

Source: PatentFreedom

Now for some detail. Let’s have a closer look at the patent business.
