Around the turn of the century we released the LEAST successful version of our antivirus products – EVER! I don’t mind admitting it: overall, it was a mega-fail. Curiously, that same version was also mega-powerful when it came to protection against malware, and it had settings galore and all sorts of other bells and whistles. The one thing that let it down, though, was that it was large, slow and cumbersome, particularly compared with our previous versions.

I could play the subjunctiveness game here and start asking obvious questions like ‘who was to blame?’, ‘what should have been done differently?’, etc., but I’m not going to do that (I’ll just mention in passing that we made some very serious HR decisions back then). I could also play ‘what if’: who knows how different we as a company would be now if it hadn’t been for that foul-up? Best, though, I think, is simply to state that we realized we’d made a mistake, went back to the drawing board, and made sure our next version was way ahead of the competition on EVERYTHING. Indeed, it was the engine that drove us to dominance in global antivirus retail sales, where our share continues to grow.

That’s right, our post-fail products were ahead of everybody else’s by miles, including on performance, aka efficiency, aka how many system resources get used up during a scan. But still that… stench of sluggishness pursued us for years. Well, frankly, the smell is still giving us some trouble today. Memories are long, and they often don’t listen to new facts :). Also, back then our competitors put a lot of effort into trolling us, and they still try to. Perhaps that’s because there’s nothing else – real or current – to troll us for :).

Now, though, it’s time for some well-overdue spring cleaning: time to clear up, once and for all, all the nonsense that’s accumulated over the years about our products’ efficiency…

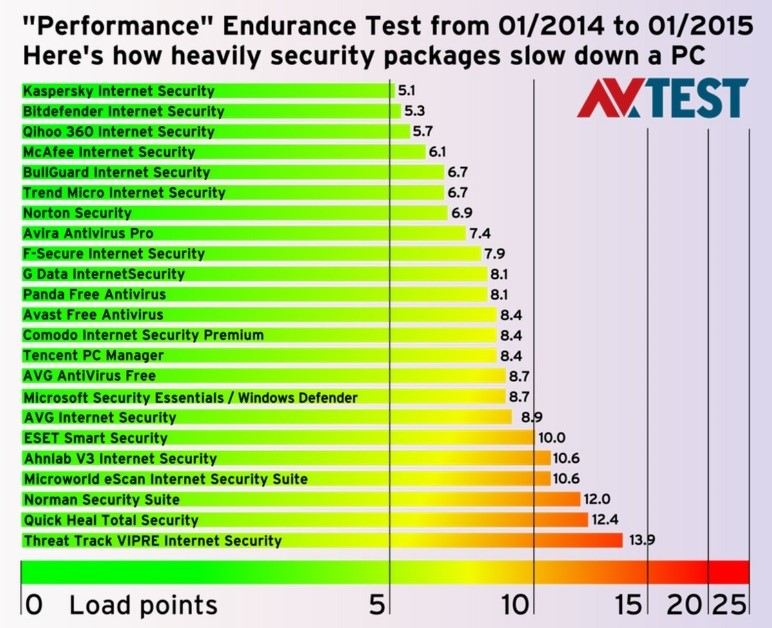

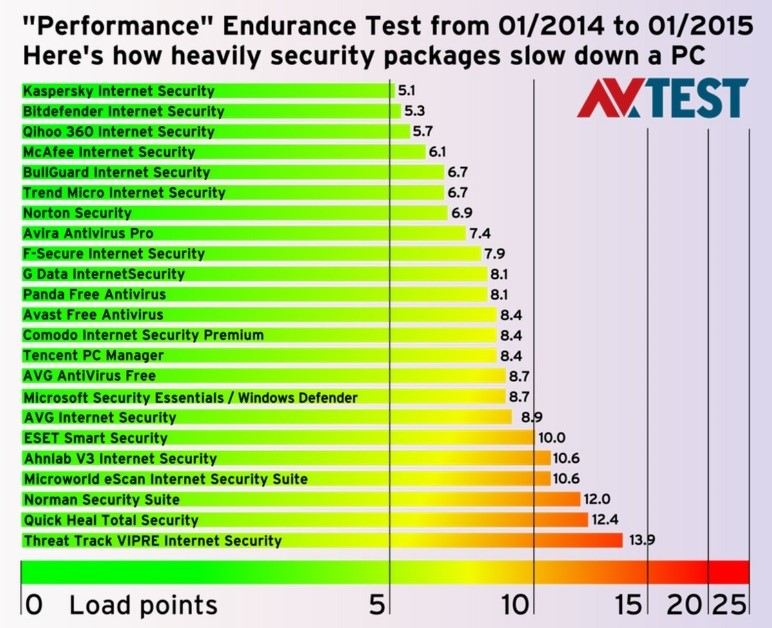

Righty. Here are the results of recent antivirus product performance tests. Nothing but facts from a few respected testing labs – and it’s great food for thought. Have a look at the other vendors’ results, compare, and draw your own conclusions:

1. AV-Test.org

I’ve said many times that if you want a truly objective picture, you need to look at the broadest possible range of tests over the longest possible period. There are notorious cases of certain vendors submitting to test labs ‘cranked up’ versions optimized for specific tests instead of the regular ‘working’ versions you get in the shops.

The guys from the Magdeburg lab have done one heck of a job in analyzing the results achieved by 23 antivirus products during the past year (01/2014 – 01/2015) to determine how much each product slowed the computer down.

No comment!

Read on for some valuable advice on assessing test results…