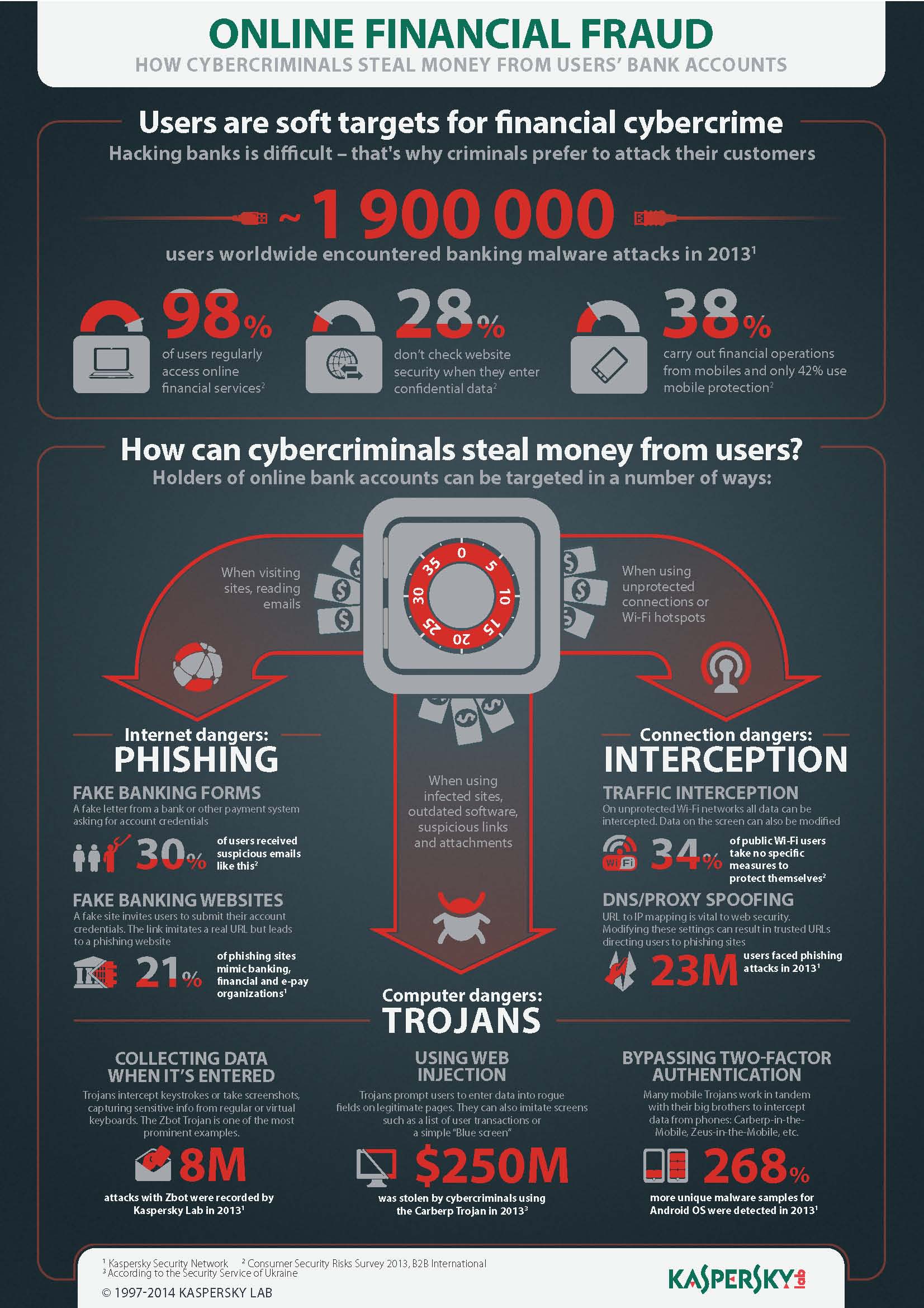

In just a dozen or so years the computer underground has transformed itself from hooliganistic adolescent fun and games (fun for them, not much fun for the victims) to international organized cyber-gangs and sophisticated state-sponsored advanced persistent threat attacks on critical infrastructure. That’s quite a metamorphosis.

Back in the hooliganistic era, for various reasons the cyber-wretches tried to infect as many computers as possible, and it was specifically for defending systems from such mass attacks that traditional antivirus software was designed (and it did a pretty good job of it). These days, new threats are just the opposite. The cyber-scum know anti-malware technologies inside out, try to be as inconspicuous as possible, and increasingly opt for targeted – pinpointed – attacks. And that’s all quite logical from their business perspective.

So sure, the underground has changed; however, the security paradigm, alas, remains the same: the majority of companies continue to apply technologies designed for mass epidemics – i.e., outdated protection – to tackle modern-day threats. As a result, in the fight against malware, companies maintain mostly reactive, defensive positions, and thus are always one step behind the attackers. Since today we’re increasingly up against unknown threats for which no file or behavioral signatures have been developed, antivirus software often simply fails to detect them. At the same time, contemporary cyber-slime (not to mention cyber military brass) meticulously check how good their malicious programs are at staying completely hidden from AV. Not good. Very bad.

Such a state of affairs becomes even more paradoxical when you discover that the security industry’s arsenal today already contains alternative protection concepts, built into existing products, that are able to tackle new unknown threats head-on.

I’ll tell you about one such concept today…

Now, in computer security engineering there are two possible default stances a company can take with regard to security: “Default Allow” – where everything (every bit of software) not explicitly forbidden is permitted for installation on computers; and “Default Deny” – where everything not explicitly permitted is forbidden (which I briefly touched upon here).

As you’ll probably be able to guess, these two security stances represent two opposing positions in the balance between usability and security. With Default Allow, all launched applications have carte blanche to do whatever they damn-well please on a computer and/or network, and AV here takes on the role of the proverbial Dutch boy – keeping watch over the dyke and, should it spring a leak, frenetically putting his fingers in the holes (and holes of varying size, i.e., seriousness, appear regularly).

With Default Deny, it’s just the opposite – applications are by default prevented from being installed unless they’re included on the given company’s list of trusted software. No holes in the dyke – but then probably no excessive volumes of water running through it in the first place.
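To make the contrast concrete, here's a minimal sketch of the two stances as simple list checks. Everything in it is an assumption made up for illustration: the file names, the `BLOCKLIST` and `ALLOWLIST` sets and both function names are hypothetical, and real products decide on far more than a file name (hashes, digital signatures, vendors, categories and so on).

```python
# Illustrative sketch only: hypothetical names, not taken from any real product.
BLOCKLIST = {"known_trojan.exe"}            # what a Default Allow setup explicitly forbids
ALLOWLIST = {"winword.exe", "outlook.exe"}  # what a Default Deny setup explicitly trusts


def default_allow(app: str, blocklist: set = BLOCKLIST) -> bool:
    """Default Allow: everything not explicitly forbidden is permitted."""
    return app not in blocklist


def default_deny(app: str, allowlist: set = ALLOWLIST) -> bool:
    """Default Deny: everything not explicitly permitted is forbidden."""
    return app in allowlist


if __name__ == "__main__":
    # The third entry stands in for an unknown threat that is on neither list.
    for app in ("winword.exe", "known_trojan.exe", "brand_new_malware.exe"):
        print(f"{app}: allow-policy={default_allow(app)}, deny-policy={default_deny(app)}")
```

The interesting case is the program that appears on neither list: under Default Allow it runs, since nobody has forbidden it yet; under Default Deny it doesn't, since nobody has approved it yet.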

Besides unknown malware cropping up, companies (their IT departments in particular) have many other headaches connected with Default Allow. One: installation of unproductive software and services (games, communicators, P2P clients… – the number of which depends on the policy of a given organization); two: installation of unverified and therefore potentially dangerous (vulnerable) software via which the cyber-scoundrels can wriggle their way into a corporate network; and three: installation of remote administration software, which allows access to a computer without the permission of the user.

Re the first two headaches, things should be fairly clear. Re the third, let me bring some clarity with one of my EK Tech-Explanations!

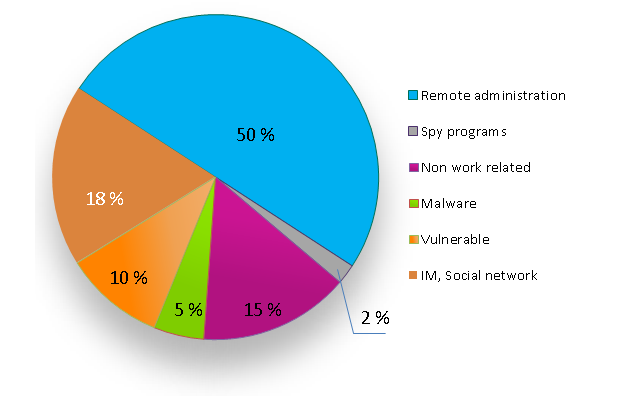

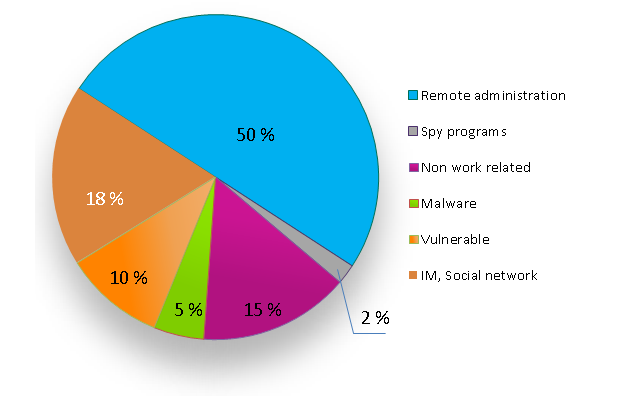

Not long ago we conducted a survey of companies in which we posed the question, “How do employees violate adopted IT-security rules by installing unauthorized applications?” The results we got are given in the pie-chart below. As you can see, half the violations come from remote administration. By this we mean employees or systems administrators installing remote control programs to access internal resources remotely, or to access computers for diagnostics and/or “repairs”.

The figures speak for themselves: it’s a big problem …