May 13, 2020

Cyber-yesteryear – pt. 1: 1989-1991.

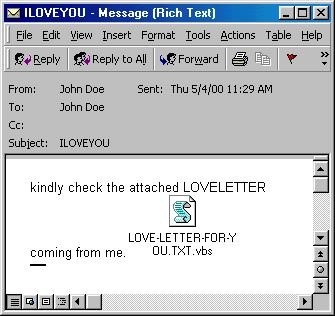

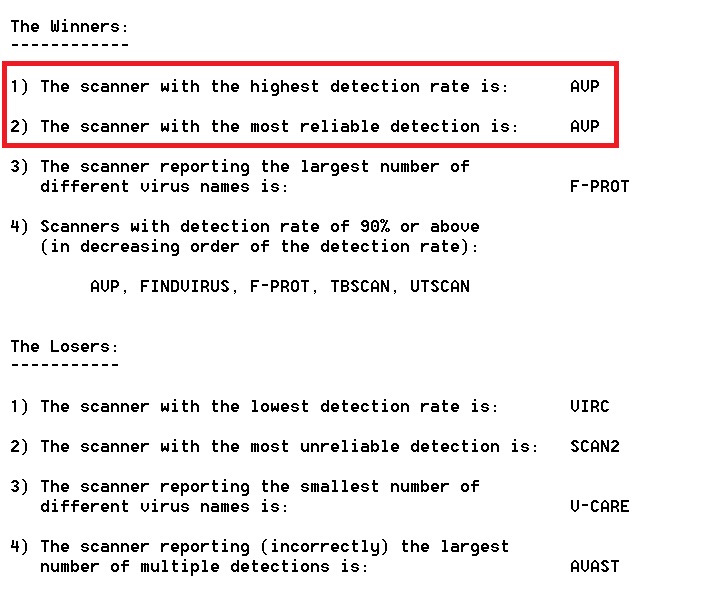

Having written a post recently about our forever topping the Top-3 in independent testing, I got a bit nostalgic for the past. Then, by coincidence, there was the 20th anniversary of the ILOVEYOU worm: more nostalgia, and another post! But why stop there, I thought. Not like there’s much else to do. So I’ll continue! Thus, herewith, yet more K-nostalgia, mostly in a random order as per whatever comes into my head…

First up, we press rewind (on the 80s’ cassette player) back to the late 1980s, when Kaspersky was merely my surname :).

Part one – prehistorical: 1989-1991

I traditionally consider October 1989 as when I took my first real steps in what turned out to be my professional career. I discovered the Cascade virus (Cascade.1704) on an Olivetti M24 (CGA, 20 MB HDD) in executable files it had managed to infiltrate, and I neutralized it.

The narrative normally glosses over the fact that the second virus wasn’t discovered by me (out of our team) but by Alexander Ivakhin. But after that we started to ‘woodpeck’ at virus signatures regularly using our antivirus utility (can’t really call it a ‘product’). Viruses would appear more and more frequently (i.e., a few a month!), and I would disassemble them, analyze them, classify them, and enter the data into the antivirus.

But the viruses just kept coming – new ones that chewed up and spat out computers mercilessly. They needed protecting! This was around the time we had glasnost, perestroika, democratization, cooperatives, VHS VCRs, Walkmans, bad hair, worse sweaters, and also the first home computer. And as fate would have it, a mate of mine was the head of one of the first computer cooperatives, and he invited me to come and start exterminating viruses. I obliged…

My first ‘salary’ was… a box of 5″ floppy disks, since I just wasn’t quite ready morally to take any money for my services. Not long afterward though, I think in late 1990 or early 1991, the cooperative signed two mega-contracts, and I made a tidy – for the times – sum out of both of them.

The first contract was installation of antivirus software on computers imported to the USSR from Bulgaria by a Kiev-based cooperative. Bulgarian computers back then were plagued by viruses, which made a right mess of data on disks; the viruses, btw, were also Bulgarian.

The second contract was for licensing antivirus technologies in a certain mega-MS-DOS-based system (MS Office’s ~equivalent back then).

What did I spend my first ‘real’ money on?… I think it was a VCR. And a total waste of money that was. I never had the time for watching movies, let alone recording stuff and watching it again. My family weren’t big into videos either. Oof. (Btw: a good VCR back then cost… the same as a decent second-hand Lada!)

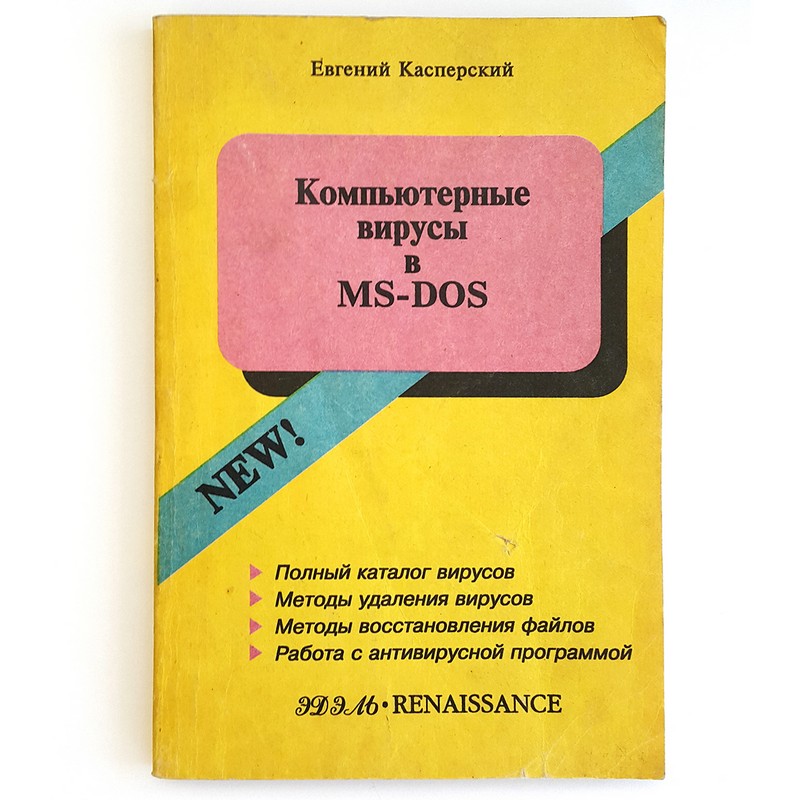

My ~second purchase was a lot more worthwhile – several tons of paper for the publication of my first book on computer viruses. Btw: just after this buy the Pavlov Reform kicked in, so it was just as well I’d spent all my rubles – days later a lot of my 50 and 100-ruble notes would have been worthless! Lucky!

My book was published in the spring of 1991. Alas, it hardly sold – with most copies gathering dust in some warehouse no doubt. I think so anyway; maybe it did sell: I haven’t found a copy anywhere since, and in the K archive we only have one copy (so if anyone has another copy – do let me know!). Another btw, btw: I was helped immensely by a certain Natalya Kasperskaya back then in the preparation of the book. She was at home juggling looking after two little ones and editing it over and over; however, I think it must have piqued her curiosity in a good way – she warmed to the antivirus project and went on to take a more active part.

That pic there is of my second publication. The single copy of the first one – just mentioned – is at the office, and since we’re taking this quarantine thing seriously, I can’t physically take a pic of it :(.

Besides books, I also started writing articles for computer magazines and accepting occasional speaking opportunities. One of the clubs I was speaking at would also send out shareware on diskettes by post. It was on such diskettes that the early versions of our antivirus – ‘-V by doctor E. Kasperski’ (later known as ‘Kaspersky’:) appeared (before this, the only users of the antivirus were friends and acquaintances).

The main differences between my antivirus… utility and the utilities of others (there’s no way these could ever be called ‘products’) were, first: it had a proper user interface – in the pseudo-graphics mode of MS-DOS – which even (!) supported the use of a mouse. Second: it featured ‘resident guard’ and utilities for the analysis of system memory to search for hitherto unknown resident MS-DOS viruses (this was back before Windows).

The oldest saved version of this antivirus is the -V34 from September 12, 1990. The number ‘34’ comes from the number of viruses found! Btw: if anyone has an earlier version – please let me know, and in fact any later versions too – besides -V.

The antivirus market back then didn’t exist in Russia, unless you can call Dmitry Lozinsky’s ‘Aidstest’ on a diskette for three rubles a market. We tried to organize sales via various computer cooperatives or joint ventures, but they never came to much.

So I had to settle into my role, in 1990-1991, as a freelance antivirus analyst, though no one had heard of such a profession. My family wasn’t too impressed, to say the least, especially since the CCCP was collapsing, and a pertinent question ‘discussed in kitchens’ [no one did cafes/restaurants/bars for their meet-ups and chit-chats back then: there weren’t many in the first place, and not many folks had the money to spend in them even if they had] would be something like: ‘where’s all the sugar gone from the shop shelves?’ Tricky, tough times they were; but all the more interesting for it!

To be continued!…