January 24, 2013

All Mouth, No Trouser.

“All animals are equal, but some are more equal than others.” Thus spake Napoleon, the head-hog in Orwell’s dystopian classic.

The genius of this phrase lies in its universality – a small addition turns the truth inside out. Alas, this witty paradox crops up not only in farmyard-revolution sagas, but also in such (seemingly very distant) themes as – and you won’t believe this – antivirus tests! Thus, “All published AV-test results are equal, but some are more equal than others.” Indeed, after crafty marketing folk have applied their magic and “processed” the results of third-party comparative AV tests, the final product – test results as published by certain AV companies – can hardly be described as equal in value: they get distorted so much that nothing of true value can be learned from them.

Let’s take an imaginary antivirus company – one that hardly distinguishes itself from its competitors with outstanding technological prowess or quality of protection, but which has ambitions of global proportions and a super-duper sales plan to fulfill them. So, what’s it gonna do first to get nearer its plan for global domination? Improve its antivirus engine, expand its antivirus database, and/or turbocharge its quality and speed of detection? No, no, no. That takes faaaar too much time. And costs faaaar too much money. Well, that is – when you’re in the Premiership of antivirus (getting up to the First Division ain’t that hard). But the nearer the top you get in the Champions League in terms of protection, the more dough is needed to secure every extra hundredth of a real percent of detection, and the more brains it requires.

It’s much cheaper and quicker to take another route – not the technological one, but a marketing one. Thus, insufficient technological mastery and poor quality of antivirus detection often get compensated for by a cunning informational strategy.

But how?

Indirectly; that’s how…

Now, what’s the best way to evaluate the quality of the protection technologies of an antivirus product? Of course it’s through the independent, objective opinions of third parties. Analysts, clients and partners give good input, but their impartiality naturally can’t be guaranteed. Comparative tests conducted by independent, specialized testing labs are where the real deal’s at. However, testers are peculiar beasts: they concentrate purely on their narrow trade – that’ll be testing – which is good, as testing done well – i.e., properly and accurately – is no easy task. But their results can often come across as… slightly dull, and could do with a bit of jazzing up. Which is where testing marketing done by those who order the testing kicks in: cunning manipulation of objective test results – to make the dirty-faced appear as angels, and/or the top-notchers appear as also-rans. It all becomes reminiscent of the ancient Eastern parable about the blind men and the elephant. Only in this case the marketing folk – with perfect eyesight – “perceive” the results with deliberate bias. The blind men couldn’t help their misperceptions.

There’s nothing criminal of course in manipulating test results. It’s just that it makes it very difficult for users to separate the wheat from the chaff. Not good. Example: It’s easy to pluck from several nerdy and not overly professional tests selective data on, say, system resource usage of one’s darling product, while keeping shtum about the results of (vastly more important) malware detection. Then that super system resource usage – and that alone – is compared with the resource usage of competitors. Next, all marketing materials bang on ad nauseam about that same super system resource usage and this is deemed exemplary product differentiation! Another example: An AV company’s new product is compared with older versions of competitors’ products. No joke! This stuff really does happen. Plenty. Shocking? Yes!

Thing is, no lies are actually being peddled in all of this – all it is is selective extraction of favorable data. No formal, fixed rules get broken – only ethical ones. And since there are thus no legal routes to get “justice”, what’s left is for us to call upon you to be on your guard and carefully filter the marketing BS that gets bandied about all over the place. Otherwise, there’s a serious risk of winding up with over-hyped yet under-tech’ed AV software, and of your losing faith in tests and in the security industry as a whole. Who needs that? Not you. Not anyone.

The topic of unscrupulous testing marketing has been discussed generally here before. Today, let’s examine more closely the tricks marketing people use in plying their dubious trade, and how it’s possible to recognize and combat them. So, let me go through them one by one…

1) Use of “opaque” testing labs to test the quality of malware detection. This is perhaps the simplest and least risky ruse that can be applied. It’s particularly favored by small antivirus companies or technologically deficient vendors. They normally find a one-man-show, lesser known “testing center” with no professional track record. They’re cheap, the methodology usually isn’t disclosed, checking the results is impossible, and all reputational risks are for the testing center itself (and it has no problem with this).

Conclusion: If a test’s results don’t give a description of the methodology used, or the methodology contains serious “bugs” – the results of such a test cannot be trusted.

2) Using old tests. Why go to all that trouble year after year when it’s possible every two or three years to win a test by chance, and then for several years keep wittering on about that one-off victory as if it were clear proof of constant superiority?

Conclusion: Check the date of a test. If it’s from way back, or there’s no link to the source (public results of the test with their publication date) – don’t trust such a test either.

3) Comparisons with old versions. There are two possibilities here. First: Comparisons of old products across the board – among which the given AV company’s product magically pulled a rare victory. Of course, in the meantime, the steamships of the industry have been sailing full steam ahead, with the quality of virus detection advancing so much as to be unrecognizable. Second: Comparisons of the given AV company’s product with old versions of products of competitors. Talk about below the belt!

Conclusion: Carefully cast an eye on the freshness of product versions. If you find any discrepancies, wool is most certainly being pulled over the proverbials. Forget these “test results” too.

4) Comparing the incomparable. How best to demonstrate your technological prowess? Easy! To compare the incomparable in one or two carefully chosen areas, naturally. For example, products from different categories (corporate and home), or fundamentally differing protection technologies (for example, agentless and agent-based approaches to protection of virtual environments).

Conclusion: Pay attention not only to the versions and release dates of compared products, but also to the product titles!

5) Over-emphasizing certain characteristics while not mentioning others. Messed up a test? Messed up all tests? No problem. Testing marketing will sort things out. The recipe’s simple: Take a specific feature – usually high scan speed or low system resource usage (the usual features of hole-ridden protection) – pull only this out of all the results of testing, and proceed to emblazon it on all your banners all around the globe and proudly wave them about as if they represent proof of unique differentiation. Here’s a blatant example.

Conclusion: if they bang on about “quickly, effectively, cheaply” – it’s most likely a con-job. Simple as that.

6) Use of irrelevant methodologies. Here there’s normally plenty of room for sly maneuver. Average users usually don’t (want to) get into the details of testing methodologies (as they’ve better things to do) but, alas, the devil’s always in the details. What often happens is that tests don’t correspond to real world conditions and so don’t reflect the true quality of antivirus protection of products in that same real world. Another example: Subjective weightings of importance given to test parameters.

Conclusion: if apples and pears are being compared – you might as well compare the results of such testing with… the results of toilet-flushing loudness testing: the usefulness of the comparisons will be about the same.

7) Selective testing. This takes some serious analytical effort to pull off and requires plenty of experience and skill in bamboozlement/spin/flimflam :) Here some complex statistical-methodological know-how is applied, where the strong aspects of a product are selectively pulled out of different tests, and then one-sided “comparative” analysis is “conducted” using combinations of the methods described above. A barefaced example of such deception is here.

Conclusion: “If you’re going to lie, keep it short”©! If they go on too long and not very on-theme – well, it’s obvious…

8) Plain cheating. There are masses of possibilities here. The most widespread is stealing detection and fine-tuning products for specific tests (and onwards as per the above scenarios). In AV industry chat rooms a lot is often talked about other outrageous forms of cheating. For example, it was claimed that one unnamed developer gave testers a special version of its product tailored to work in their particular testing environment. The trick was easy: to compensate for its inability to detect all infected files in the test bed the product detected just about all files it came across. Of course as a side effect this produced an abnormal number of false positives – but guess what? The resultant marketing materials never contained a single word about them. Nice! Not.
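To see why keeping quiet about false positives matters, here is a minimal Python sketch with made-up numbers. The 1,000-file test bed and both scanners are hypothetical and are not data from any real test. It compares an honest scanner with one that flags just about everything it comes across:

```python
def rates(flagged, infected, all_files):
    """Return (detection_rate, false_positive_rate) for one scan."""
    clean = all_files - infected
    detection_rate = len(flagged & infected) / len(infected)
    false_positive_rate = len(flagged & clean) / len(clean)
    return detection_rate, false_positive_rate


# Hypothetical test bed: 1,000 files, of which 100 are infected.
all_files = set(range(1000))
infected = set(range(100))

# Hypothetical honest scanner: catches 90 infected files, wrongly flags 2 clean ones.
honest = set(range(90)) | {500, 501}

# Hypothetical "detect just about everything" scanner.
flag_all = set(all_files)

honest_rates = rates(honest, infected, all_files)
flag_all_rates = rates(flag_all, infected, all_files)
```

The flag-everything scanner gets a perfect detection rate, but its false-positive rate is every bit as “perfect”. A detection figure quoted without its false positives therefore tells you very little.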

Conclusion: Only professionals can catch cheaters out. Average users can’t, unfortunately. So what’s to be done? Look at several competing tests. If in one test a certain product shows an outstanding result, while in others it bombs – it can’t be ruled out that the testers were simply deceived somehow.
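The “look at several tests” advice can be roughed out in a few lines of Python. This is only a sketch: the lab names, the scores and the 15-point gap are all assumptions picked for illustration, not an established method. It flags any test where a product scores far above its own median across tests:

```python
from statistics import median


def suspicious_outliers(scores, gap=15.0):
    """Return tests where the product beat its own median score by more than `gap` points."""
    mid = median(scores.values())
    return sorted(name for name, score in scores.items() if score - mid > gap)


# Hypothetical detection scores (percent) for one product in four different tests.
scores = {"Lab A": 99.0, "Lab B": 71.0, "Lab C": 68.5, "Lab D": 73.0}

flagged = suspicious_outliers(scores)
```

With these invented figures only the Lab A result stands out. That alone doesn’t prove cheating, but it is exactly the kind of result worth a second look.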

9) And the last trick – also the simplest: to refuse to participate in testing. Or to forbid testers from naming products by their actual names, and instead hide behind “Vendor A”, “Vendor B”, etc. After all, why take part in the first place if the results of testing will prove that the emperor indeed wears no clothes? If a title disappears from lists of tested products, is it possible to trust such a product? Of course not.

Conclusion: Carefully check whether a given product takes part in all public tests worthy of trust. If it only takes part in those where its merits are shown and no/few shortcomings – crank up the suspiciousness. Testing marketing could again be at work doing its shady stunts.

By the way, if any readers of this blog want to investigate different antivirus test results more closely – and find any KL testing marketing doing any of the above-mentioned monkey business – don’t be shy: please fire your flak in this direction in the comments. I promise to make amends and to… have a quiet (honest!) word with those responsible :)

And now – to recap, briefly: what a user should do, who to believe, and how to deal with hundreds of tables, tests and charts. (Incidentally, this has already been dealt with – here). So, several important rules for “reading” test results in order to expose crooked testing marketing:

- Check the date of a test, and the versions and features of the tested products.

- Check the history of “appearances” of a product in tests.

- Don’t focus on a specific feature. Look at the whole spectrum of capabilities of a product – most importantly, the quality of protection.

- Have a look at the methodology and check the reputation of the testing lab used.
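For those who like things spelled out, the rules above can be sketched as a simple checklist in Python. Everything here is an assumption made for illustration: the `VendorClaim` fields, the one-year age limit and the minimum of three trusted tests are hypothetical, not an official standard.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class VendorClaim:
    """One marketing claim about a test result (all fields are illustrative)."""
    test_date: date
    versions_current: bool       # were all compared products current releases?
    trusted_tests_entered: int   # recent trustworthy public tests the product took part in
    covers_protection: bool      # is quality of protection reported, not just speed?
    methodology_published: bool
    lab_reputable: bool


def red_flags(claim, today, max_age_days=365, min_tests=3):
    """Return the rules of thumb the claim fails (thresholds are assumptions)."""
    flags = []
    if (today - claim.test_date).days > max_age_days:
        flags.append("old test")
    if not claim.versions_current:
        flags.append("outdated product versions")
    if claim.trusted_tests_entered < min_tests:
        flags.append("patchy test history")
    if not claim.covers_protection:
        flags.append("single feature, no protection quality")
    if not (claim.methodology_published and claim.lab_reputable):
        flags.append("unclear methodology or unknown lab")
    return flags


# Hypothetical claim: an old test, old versions, one appearance, speed figures only.
claim = VendorClaim(
    test_date=date(2010, 6, 1),
    versions_current=False,
    trusted_tests_entered=1,
    covers_protection=False,
    methodology_published=True,
    lab_reputable=True,
)
flags = red_flags(claim, today=date(2013, 1, 24))
```

The more flags a claim collects, the more salt it should be taken with. The answers to the individual questions still have to come from reading the original test report.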

The last rule – checking the methodology and the lab’s reputation – won’t really concern all readers, maybe just specialists. For non-specialists, I also recommend getting to know the list of testing centers below. These are respected teams, with many years of experience in the industry, who work with tried-and-tested, relevant methodologies, and who fully comply with AMTSO (the Anti-Malware Testing Standards Organization) standards.

But first a disclaimer – to head off the trolling potential from the outset: we don’t always take top place in these testers’ tests. It’s only their professionalism that I base my recommendations on.

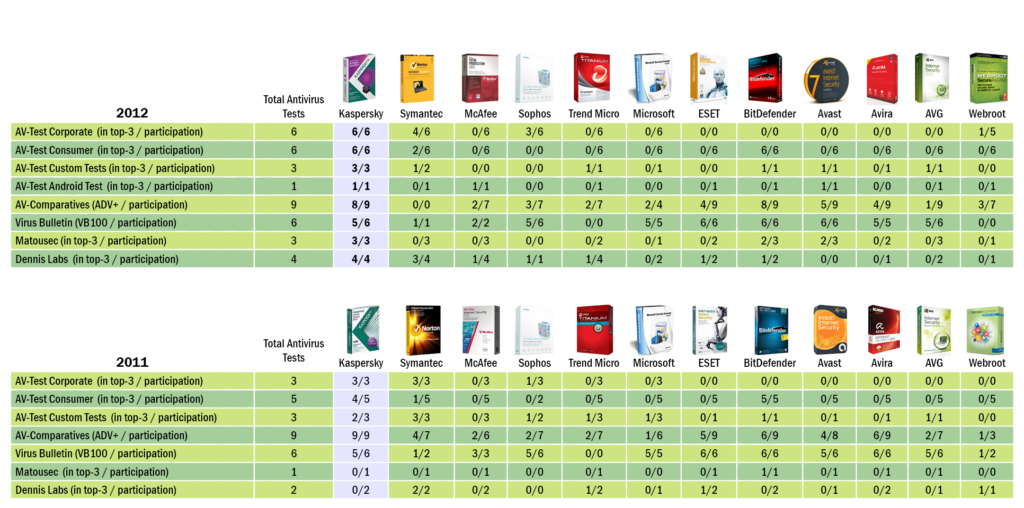

And here – a final power chord: the ranking of testing centers for 2011-2012. Some interesting background for those who yearn for more info:

And really finally finally – already totally in closing, to summarize the above large volume of letters:

Probably someone will want to have a pop at me and say the above is my having a cheap pop at competitors. In actual fact the main task here – for the umpteenth time – is to (re)submit for public discussion an issue about which a lot has often been said for ages already, but for which a solution hasn’t appeared. Namely: that still to this day there exist no established and intelligible methodologies for conducting comparative testing of antivirus products agreed upon by all across the board. The methodologies that do exist are alas not fully understood – not only by users, but also by developers!

In the meantime, let’s just try and get along, but not close our eyes to the problem that faces this field, while taking some comfort in the knowledge that “creative manipulation” of statistics normally stays within certain reasonable limits – for every developer. As always, users simply need to make better choices by digging deeper to find real data – separating the wheat from the chaff. Alas, not everyone has the time or patience for such an undertaking. Understandable. Shame.

Last words: Be alert!