September 10, 2019

i-Closed-architecture and the illusion of unhackability.

The end of August brought us quite a few news headlines around the world on the cybersecurity of mobile operating systems; or rather, on the lack of it.

First up there was the news that iPhones have been getting attacked for a full two years (!) via a full 14 vulnerabilities (!) in iOS-based software. To be attacked, all a user had to do was visit one of several hacked websites – nothing more – and they’d never know anything about it.

But before all you Android heads start with the ‘nah nana nah nahs’ aimed at the Apple brethren, the very same week the iScandal broke, it was reported that Android devices had been targeted by (possibly) some of the same hackers who had been attacking iPhones.

It would seem that this news is just the next in a very long line of confirmations that, no matter what the OS, there will always be vulnerabilities in it that can be found and exploited by certain folks – be they individuals, groups of individuals, or even countries (via their secret services). But there’s more to this news: it brings about a return to the discussion of the pros and cons of closed-architecture operating systems like iOS.

Let me first quote a tweet that perfectly describes the status of cybersecurity in the iEcosystem:

So people were infecting iPhone users for years undetected. I am not really surprised. I am always saying that the reason we have this illusion of iOS security is because the devices are not inspectable and there is little chance you will ever know you are infected.

— Stefan Esser (@i0n1c) August 30, 2019

In this case Apple was real lucky: the attack was discovered by white-hat hackers at Google, who privately gave the iDevelopers all the details. The iDevelopers in turn bunged up the holes in their software, and half a year later (when most iOS users had already updated) the world was told about what had happened.

Question #1: How quickly would the company have been able to solve the problem if the information had gone public before the release of the patch?

Question #2: How many months – or years – earlier would these holes have been found by independent cybersecurity experts if they had been allowed access to the diagnostics of the operating system?

To be frank, what we’ve got here is a monopoly on research into iOS. Both the search for vulnerabilities and the analysis of apps are made much more difficult by the excessively closed nature of the system. The result is almost complete silence on the security front in iOS. But that silence does not actually mean everything’s fine; it just means that no one actually knows what’s really going on in there – inside those very expensive shiny slabs of aluminum and glass. Even Apple itself…

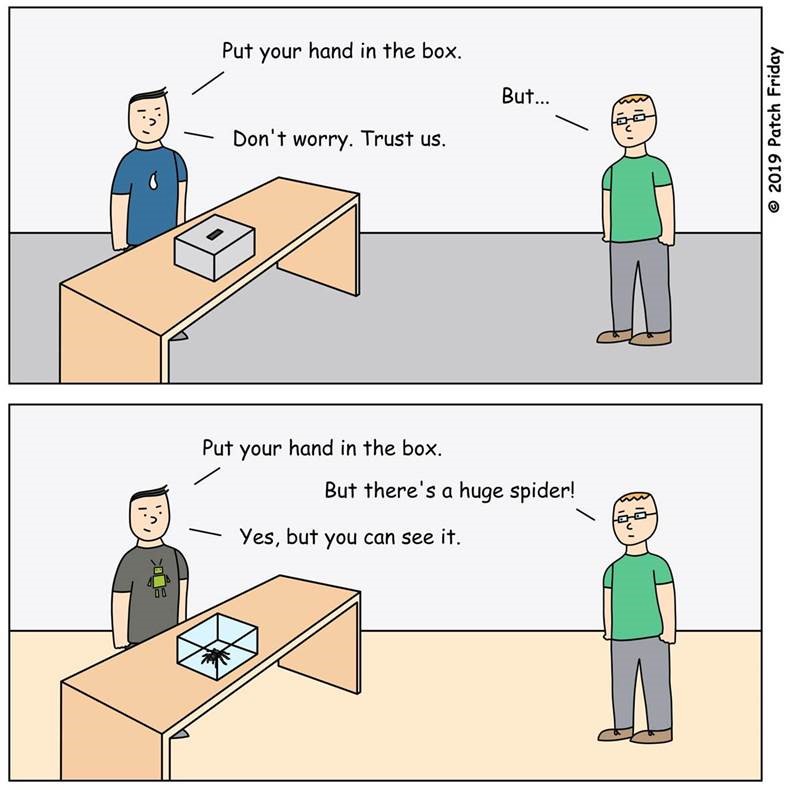

This state of affairs allows Apple to continue to claim it has the most secure OS; of course it can – as no one knows what’s inside the box. Meanwhile, time passes, independent experts still cannot meaningfully analyze what is inside the box, and hundreds of millions of users are left waiting helplessly for the next wave of attacks to hit iOS. Or, put another way – in pictures…:

Now, Apple, to its credit, does put a lot of time and money into increasing security and confidentiality with regard to its products and ecosystem as a whole. Thing is, there isn’t a single company – no matter how large and powerful – that can do what the whole world community of cybersecurity experts can do combined. Moreover, the most bandied-about argument for iOS being closed to third-party security solutions is that any access by independent developers to the system would represent a potential vector of attack. But that is just nonsense!

Discovering vulnerabilities and flagging bad apps is possible with read-only diagnostic technologies, which can expose malicious anomalies by analyzing system events. However, such apps are being firmly expelled from the App Store! I can’t see any good reason for this besides fear of losing the ‘iOS research monopoly’… oh, and of course the ability to continue pushing the message that iOS is the most secure mobile platform. And this is why, when iUsers ask me how they’re supposed to actually protect their iDevices, I have just one simple stock answer: all they can do is pray and hope – because the whole global cybersecurity community just ain’t around to help.