April 11, 2025

AI-Apocalypse Now? Nope, but maybe later…

What exactly is artificial intelligence?

These days the term is slapped on practically anything software-driven that runs automatically. If it can do something by itself, people break into a cold sweat and are ready to swear eternal allegiance to it. In reality, “artificial intelligence” means different things to different layers of our planet’s population, depending on their level of awareness. For example, there are the rather primitive definitions in online encyclopedias, where AI, I quote, “refers to the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making”. This means, for example, that being able to play checkers or chess falls under the AI definition – even if the program can’t do anything else: no bike riding, no potato peeling, no pizza delivery routing…

However, the collective [un]consciousness believes that these IT novelties with “smart” features are indeed the very “digital brain” seen in Hollywood movies – capable of anything and everything, now and forever. It also believes that, soon, we mammals will have to go and hide in caves again – just like in the dinosaur era. Likely? I, for one, don’t think so…

The modern popular belief in an all-powerful AI is pure nonsense: about as ridiculous as ancient tribes’ terror during thunderstorms, when they thought the gods were battling over their divine goals and motivations.

Neural networks, machine learning, ontology, generative AI, and other programming stuff – that’s the current level of “digital intelligence”; nothing more. These are programs or software-hardware systems designed to solve specific tasks – trained, steered, and fine-tuned by human experts. They’re not even trying to be universal – current tech just can’t handle that!

So – my recommendation: take a deep breath in, and then a long one out. Things are fine for now. And probably will be for a good while. All the same – let’s talk about AI…

AI and mass fear

The generative AI sweeping across the planet is stressing out even fairly rational folks – for no good reason. It’s kind of like when the first cars showed up, and coachmen freaked out at the new “horseless carriages”. But no one should be freaking out this time.

Still, when we see a machine effortlessly doing things much faster and better than us, we recall fiction. And there’s plenty of it – from Asimov (The Three Laws of Robotics / I, Robot) and 2001: A Space Odyssey, to the various Terminators. And if you want to dive even deeper into AI-fear, check out The Anti-Aircraft Codes of Al-Efesbi or iPhuck 10 by Pelevin.

After reading such dystopian content, folks start wondering if such horrors are about to become reality.

So what can I say? Sure, machine learning algorithms can do many things much faster and better than us Homo sapiens – who get distracted, anxious, lazy, suffer indigestion, need food and sleep – often during working hours. In our company, such tech has been solving practical cyberthreat-research tasks for almost 20 years now. Without our machine-learning-driven Auto-Woodpecker, we’d need thousands of analysts to process the same volume of data. Instead, we have just a couple of experts in our Antivirus Lab per shift.

When should we expect real AI to appear – and will it destroy us?

So, will an AI apocalypse hit us soon? Alright, here’s my take – along with those of a few respected colleagues…

Let’s start with me. Disclaimer: I’m not an AI expert – not even close, but here’s how I see it:

Should we expect strong AI, or artificial general intelligence (AGI), in the next few years?

What do we mean by strong AI? Today’s AI can already pass the Turing test, do math, draw, code, write poems, and play chess – but it’s STILL a long way off from the real thing. It lacks common sense, and has no consciousness (or self-awareness). Creating strong AI is currently impossible: we don’t have the tech, the hardware (humanity literally can’t make enough GPUs) or the software, and we don’t have a clue even as to how it could theoretically work. And today’s generative AI might even be a dead end on the road to human-level intelligence. Final word from me: nothing to worry about, either for us or for our kids.

Could artificial intelligence destroy humanity?

To me, the odds of real AI destroying humanity are 50% – about the same as running into a dinosaur on the street in that old joke. Maybe it will destroy us, maybe it won’t. If it ever gets built, it’ll be fascinating to see – but we won’t live to see it.

As for today’s so-called AI, it could destroy humanity if it’s deliberately trained to do so. And it’d complete the task pretty well given good training and tools. But it may hallucinate, mess up, and make funny and dumb mistakes. If you don’t train it to destroy humanity – it won’t. So sleep tight – and stop stressing over AI.

So what do my colleagues think?…

Making predictions is really hard

First, let’s hear from a real expert in modern AI tech. Vladislav Tushkanov is the head of our AI Technology Research Center, and is deeply immersed in the AI field academically (so this gets rather technical):

“Right now, the main paradigm in AI is transformer-based machine-learning architecture – both textual and multimodal. It’s progressing fast – small models already outperform the giants from two years ago, and the hardware improvements (like Cerebras chips) are mind-blowing. But we don’t know how long progress in this paradigm will last, or, if it ends, whether we’ll find another path.”

Vladislav added that there’s no consensus on what exactly constitutes true artificial intelligence, and that there are no agreed-upon criteria for one day declaring it achieved. And he admits he’s been wrong many times before in predicting hardware advancement and LLM capabilities.

But as for the “will AI destroy humanity?” question, Vladislav… dodges it. Make of that what you will (eek!).

Real AI might want to destroy us – but that’s still a long way off

Next up: Sergey Soldatov, head of the Kaspersky SOC (Security Operations Center), defending both our infrastructure and our MDR clients. By the way, our SOC has used a machine-learning-driven auto-analyst since 2018 to reduce routine workload on human experts.

Sergey thinks that if “real” or “strong” AI is created, it will definitely… destroy humanity! Its motivation? Something like that of Agent Smith in The Matrix: “Humanity is a disease, a cancer of this planet, and we are the cure”.

It’s a grim outlook, but here’s the good news: Sergey believes we’re still far from building full-blown AI.

AI is already breeding people like rabbits

Next, let’s hear from Vladimir Dashchenko, our industrial-systems cybersecurity expert – an active lecturer and speaker.

Vladimir boldly suggests two things:

a) AGI creation is just around the corner: tech is racing ahead in giant leaps.

b) According to U.S. data, most young people today find their partners through dating apps powered by neural networks. Thus, these AI technologies are already literally “breeding” humanity in the biological sense!

Stupidity, not AI, will more likely destroy humanity

Finally, a word from Igor (Gosha) Kuznetsov, the head of our Global Research and Analysis Team (GReAT).

Should we expect strong (or general) AI in the next few years?

We still lack the tech needed for real artificial intelligence. Today’s cutting-edge systems (LLMs & Co.) aren’t far beyond an advanced parrot – just reshuffling and remixing pre-existing material mostly scraped from the internet. What’s the average knowledge level on internet forums, and how many mistakes – deliberate or accidental – are there?

Yes, this kind of AI may outperform a junior employee who hasn’t sifted through as many low-quality forums, or an assistant summarizing without understanding. But that’s where its “skills” end. A good analogy from offline life: a few years back, soft skills were sold as the universal key for every worker. But then it turned out that employees need real abilities – not just endless conversations and discussions of problems with zero solutions.

Could artificial intelligence destroy humanity?

Sure, if humanity decides to rely entirely on this “intelligence” and stops developing its own. A personal observation: many job candidates these days think that during interview testing (and later, in their job), it’s enough to feed tasks into a neural network to get answers. Meanwhile, media hype says junior staff can be replaced with AI. But then how do you grow experts if you don’t hire beginners?

Can a soulless assistant with probabilistic logic destroy you? Yeah, with some degree of… probability – if you delegate serious tasks to it.

Summary of opinions:

Will we get strong AI soon?

Me, Soldatov, Kuznetsov: no

Dashchenko: yes

Tushkanov (actual expert): it’s complicated

Will AI destroy humanity?

Me and Kuznetsov: no

Soldatov: it would if it existed – but it doesn’t yet

Dashchenko: it’s already breeding us like rabbits

Tushkanov: didn’t answer

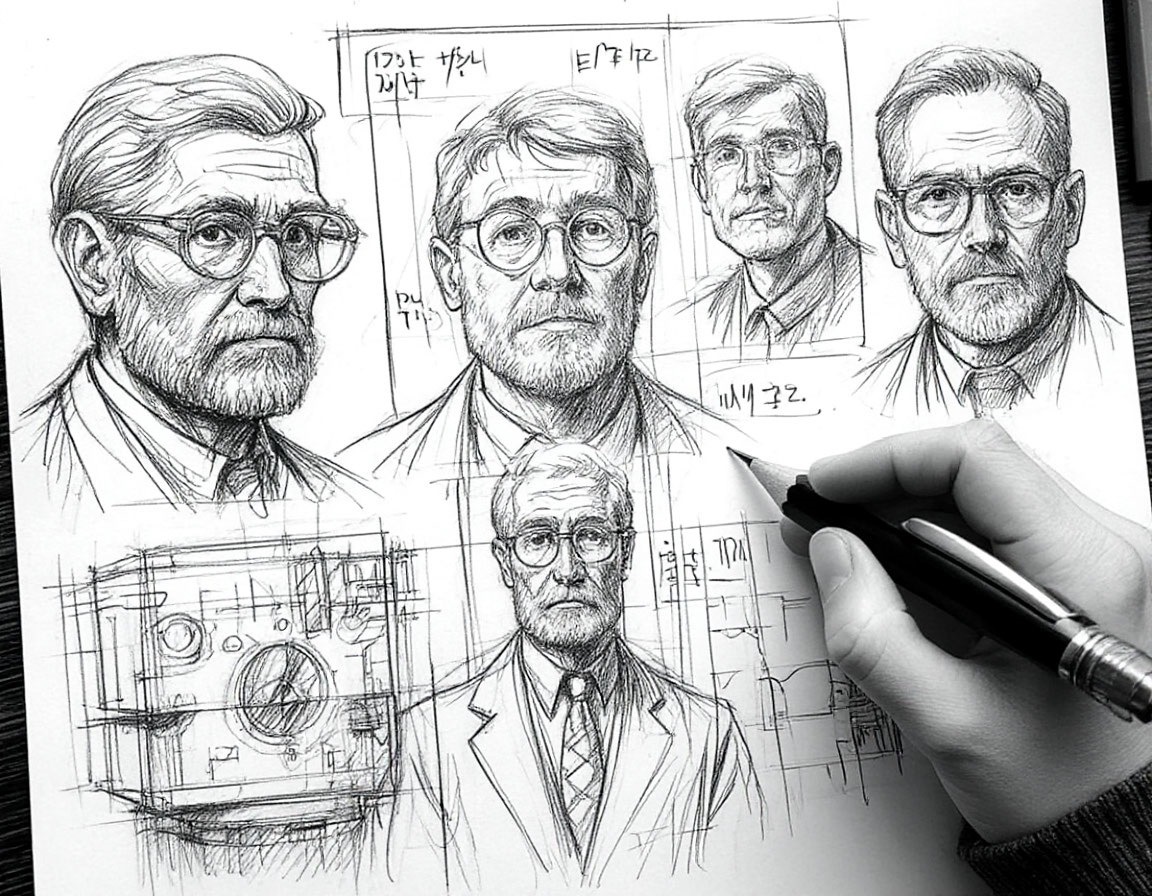

Btw: all images in this post were generated by a neural network.

And that same neural network asked me to pass along greetings and end with a poem it composed. Here it is:

Hey, humans of Earth!

Listen up right now:

AI generators

Will replace you anyhow!

The machine writes, counts…

Work in general – no big deal!

It even comes up with poems,

And they’re not all that bad – for real!

Love? AI’s there too:

Like an electronic friend.

It generates emotions and feelings

Like summer raindrops that descend!

And us? What about us?

We’ll chill a lot more, my friend!

Less work, more taking it easy,

Free as birds – forever and ever: no end!

No more plowing,

No more sowing:

AI can do it all –

And it’s still growing!

The new age is knocking loud –

Open your eyes!

The machine is man’s friend,

Not his demise!