January 27, 2012

We’re AV-Comparatives’ Product of the Year!

A big hello from Austria!

This trip, not to mention this year, has got off to a very good start – we have been named AV-Comparatives’ Product of the Year!

Here’s my take on the world of AV testing.

1. Why bother with tests for antivirus (in the broad sense of the word ‘antivirus’) programs? Well, first off, so that your average (and not so average) user has some authoritative reference point when it comes to choosing personal (or corporate) IT protection. Secondly, it means some big mouth cannot just claim that their product is “the dog’s bollocks” – they have to put their money where their mouth is! You have to separate the glitzy marketing from the technological reality. And, of course, so that any changes in the quality of protection can be tracked over time – to see how quickly different vendors react to new IT threats and how long they can keep up with the pace. And thirdly, so that the makers of security products don’t start slacking off, but keep their solutions at the cutting edge.

2. Tests vary. There are proper tests that emulate real-life conditions, with real infected sites and the behavior of average users. These tests are very expensive and are conducted once in a blue moon. That's why the more widespread tests involve "simulations" of infected environments and cover only some of a security product's features rather than all of them. For example, there are detection tests using collections of millions of files, heuristic tests, tests that check how well a product can cure an infection, etc. Then there are paid tests for PR purposes, whose results depend not so much on the quality of the product as on how unscrupulous and greedy the tester is (and yes, this sort of thing goes on).

3. One of the best known antivirus testing labs is the Austrian team AV-Comparatives under the leadership of Andreas Clementi (he’s the guy in the photos below). All sorts of tests are carried out here – “proper” ones that emulate real-world conditions as well as “partial” ones that test different antivirus features. Altogether there are at least 10 different tests, the results of which determine the Product of the Year. The prize for 2011 was awarded to us! It’s the third time in AV-Comparatives’ history that we’ve won the award (although it would be better if we won it every year…).

That's us: Andreas, Nikita (head of our anti-malware research) and myself.

Then, of course, there’s the immediate media response.

This is what the AV-Comparatives laboratory looks like. At first glance it looks pretty understated, but the place is packed full of equipment.

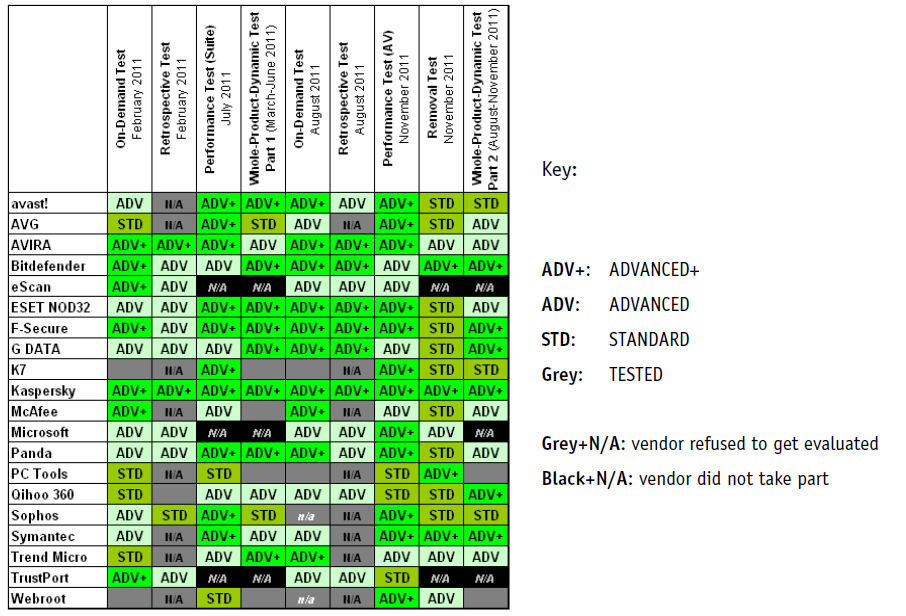

And here are AV-Comparatives’ test results for the year.

You can see the rest of the photos here.

4. Other awards in 2011. We worked damned hard and the end of the year brought a flurry of industry prizes:

4.1. Another Andreas, Andreas Marx, is in charge at the AV-Test.org test centre in Germany. On that front KIS and our new corporate product KES swept the board. And it wasn't just any old victory, but an absolute record for the tester and for all vendors! KIS achieved a final score of 17 while KES scored 17.5 (out of a possible 18; the industry average was 13.2). By the way, the tests conducted by AV-Test.org are followed closely by a number of computer magazines. For instance, PC Welt compiled a top 10 that shows who scored what. We topped the list a full 1.5 points ahead of second place. That is some result! You can read more about the tests here and here.

4.2. The latest (December) VB100 awards for KIS and KES. No, this isn't just another gong to add to our burgeoning collection: it was the first test conducted under new rules that immediately weeded out the "dead wood". Testing is now split into two parts: the obligatory part (with new features: detection of the WildlistExt collection, plus on-demand scanning with a working cloud connection) and the representative part (RAP tests, which don't influence VB100 certification). Respect to VB: it doesn't rest on its laurels, and it even gives a hard time to those who care more about test results than about real-life protection.

4.3. We also topped the podium in the German magazines Computer Bild and PC Welt, and so it goes on…

5. Yes, as in sport, we don’t always reach the finish line first. Sometimes that’s our fault (it’s being sorted), and sometimes it’s the fault of the testers (we’re trying to explain to them that they need to modify their testing methods).

To sum up, the testing industry, and taking part in its tests, is in fact very much like sport. To win, you need to train hard. To avoid disqualification, you can't use performance-enhancing drugs (and yes, that is an issue in the AV industry), and really hard work doesn't always guarantee victory… But what a great feeling it is to stand on top of the podium!